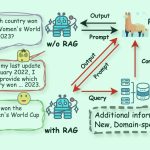

Retrieval-Augmented Generation (RAG) is an approach to building AI systems that combines a language model with an external knowledge source. In simple terms, the AI first searches for relevant documents (like articles or webpages) related to a user’s query, and then uses those documents to generate a more accurate answer. This method has been celebrated for helping large language models (LLMs) stay factual and reduce hallucinations by grounding their responses in real data.

Intuitively, one might think that the more documents an AI retrieves, the better informed its answer will be. However, recent research suggests a surprising twist: when it comes to feeding information to an AI, sometimes less is more.

Fewer Documents, Better Answers

A new study by researchers at the Hebrew University of Jerusalem explored how the number of documents given to a RAG system affects its performance. Crucially, they kept the total amount of text constant: when fewer documents were provided, those documents were slightly expanded to fill the same length that many documents would. This way, any performance differences could be attributed to the number of documents rather than simply to a shorter input.

The researchers used a question-answering dataset (MuSiQue) with trivia questions, each originally paired with 20 Wikipedia paragraphs (only a few of which actually contain the answer, with the rest being distractors). By trimming the number of documents from 20 down to just the 2–4 truly relevant ones, and padding those with a bit of extra context to maintain a consistent length, they created scenarios where the AI had fewer pieces of material to consider but still roughly the same total number of words to read.
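The padding step described above can be illustrated with a small sketch. This is not the authors' code: the function name `pad_to_budget`, the word-level budget, and the round-robin strategy are all assumptions made for illustration. It takes the few supporting passages, plus extra surrounding text for each, and grows them until the total word count matches a fixed budget.

```python
def pad_to_budget(passages, extras, budget):
    """Grow each passage with words from its own extra context until the
    total word count reaches `budget` (hypothetical helper, not from the paper).

    passages: list of word lists (the supporting paragraphs)
    extras:   list of word lists (additional text from the same source article)
    budget:   target total number of words across all passages
    """
    out = [list(p) for p in passages]
    remaining = budget - sum(len(p) for p in out)
    cursors = [0] * len(out)

    # Round-robin: add one word at a time to each passage so that the
    # extra length is spread evenly rather than dumped into one document.
    while remaining > 0:
        progressed = False
        for i, extra in enumerate(extras):
            if remaining == 0:
                break
            if cursors[i] < len(extra):
                out[i].append(extra[cursors[i]])
                cursors[i] += 1
                remaining -= 1
                progressed = True
        if not progressed:
            # No extra text left anywhere; return what we have.
            break
    return out


passages = [["a", "b"], ["c"]]
extras = [["x", "y", "z"], ["u"]]
padded = pad_to_budget(passages, extras, budget=6)
print(padded)
```

With a budget matching the length of the original 20-paragraph input, a setup like this would let the document count vary while the amount of text the model reads stays roughly fixed.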

The results were striking. In most cases, the AI models answered more accurately when they were given fewer documents rather than the full set. Performance improved significantly, in some instances by up to 10% in accuracy (F1 score), when the system used only the handful of supporting documents instead of a large collection. This counterintuitive boost was observed across several different open-source language models, including variants of Meta’s Llama and others, indicating that the phenomenon is not tied to a single AI model.
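For readers unfamiliar with the metric, here is a minimal sketch of a token-overlap F1 score as it is commonly computed for question answering. It assumes simple lowercasing and whitespace tokenization; the exact normalization used in the study is not described here, so treat `token_f1` as an illustrative helper rather than the paper's scorer.

```python
from collections import Counter


def token_f1(prediction, reference):
    """Token-overlap F1 between a predicted and a reference answer.

    Assumes lowercase + whitespace tokenization (an illustrative choice).
    """
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred == ref)

    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0

    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


score = token_f1("the Eiffel Tower", "Eiffel Tower")
print(round(score, 2))  # 0.8
```

In this example the prediction has one extra token, so precision is 2/3 and recall is 1, giving an F1 of 0.8.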

One model (Qwen-2) was a notable exception that handled multiple documents without a drop in score, but almost all of the tested models performed better with fewer documents overall. In other words, adding more reference material beyond the key relevant pieces actually hurt their performance more often than it helped.

Source: Levy et al.

Why is this such a surprise? Typically, RAG systems are designed under the assumption that retrieving a broader swath of information can only help the AI. After all, if the answer isn’t in the first few documents, it might be in the tenth or twentieth.

This study flips that script, demonstrating that indiscriminately piling on extra documents can backfire. Even when the total text length was held constant, the mere presence of many different documents (each with its own context and quirks) made the question-answering task more challenging for the AI. It appears that beyond a certain point, each additional document introduced more noise than signal, confusing the model and impairing its ability to extract the correct answer.

Why Less Can Be More in RAG

This “less is more” result makes sense once we consider how AI language models process information. When an AI is given only the most relevant documents, the context it sees is focused and free of distractions, much like a student who has been handed just the right pages to study.

In the study, models performed significantly better when given only the supporting documents, with irrelevant material removed. The remaining context was not only shorter but also cleaner: it contained facts that directly pointed to the answer and nothing else. With fewer documents to juggle, the model could devote its full attention to the pertinent information, making it less likely to get sidetracked or confused.

On the other hand, when many documents were retrieved, the AI had to sift through a mix of relevant and irrelevant content. Often these extra documents were “similar but unrelated”: they might share a topic or keywords with the query but not actually contain the answer. Such content can mislead the model. The AI might waste effort trying to connect dots across documents that don’t actually lead to a correct answer, or worse, it might merge information from multiple sources incorrectly. This increases the risk of hallucinations, instances where the AI generates an answer that sounds plausible but is not grounded in any single source.

In essence, feeding too many documents to the model can dilute the useful information and introduce conflicting details, making it harder for the AI to decide what’s true.

Interestingly, the researchers found that if the extra documents were obviously irrelevant (for example, random unrelated text), the models were better at ignoring them. The real trouble comes from distracting data that looks relevant: when all the retrieved texts are on similar topics, the AI assumes it should use all of them, and it may struggle to tell which details are actually important. This aligns with the study’s observation that random distractors caused less confusion than realistic distractors in the input. The AI can filter out blatant nonsense, but subtly off-topic information is a slick trap: it sneaks in under the guise of relevance and derails the answer. By reducing the number of documents to only the truly necessary ones, we avoid setting these traps in the first place.

There’s also a practical benefit: retrieving and processing fewer documents lowers the computational overhead for a RAG system. Every document that gets pulled in has to be analyzed (embedded, read, and attended to by the model), which uses time and computing resources. Eliminating superfluous documents makes the system more efficient: it can find answers faster and at lower cost. In scenarios where accuracy improved by focusing on fewer sources, we get a win-win: better answers and a leaner, more efficient process.

Source: Levy et al.

Rethinking RAG: Future Directions

This new evidence that quality often beats quantity in retrieval has important implications for the future of AI systems that rely on external knowledge. It suggests that designers of RAG systems should prioritize smart filtering and ranking of documents over sheer volume. Instead of fetching 100 possible passages and hoping the answer is buried in there somewhere, it may be wiser to fetch only the top few highly relevant ones.
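One simple way to favor quality over volume is to combine a relevance threshold with a small top-k cutoff. The sketch below assumes each candidate already carries a relevance score from some retriever or re-ranker; the function name `select_documents` and the default values for `k` and `min_score` are illustrative choices, not recommendations from the study.

```python
def select_documents(scored, k=3, min_score=0.5):
    """Keep at most `k` documents whose relevance score is at least `min_score`.

    scored: list of (document, score) pairs from any retriever or re-ranker
            (the scoring scale is an assumption; adjust `min_score` to match).
    """
    # Drop weak candidates first so that low-relevance passages never
    # reach the prompt, even when fewer than k strong ones exist.
    kept = [(doc, s) for doc, s in scored if s >= min_score]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return [doc for doc, _ in kept[:k]]


candidates = [("a", 0.9), ("b", 0.4), ("c", 0.7), ("d", 0.95), ("e", 0.6)]
print(select_documents(candidates))  # ['d', 'a', 'c']
```

The threshold matters as much as the cutoff: when only one passage is clearly relevant, this returns one passage rather than filling the remaining slots with near misses.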

The study’s authors emphasize the need for retrieval methods to “strike a balance between relevance and diversity” in the information they supply to a model. In other words, we want to provide enough coverage of the topic to answer the question, but not so much that the core facts are drowned in a sea of extraneous text.
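One well-known technique for trading off relevance against diversity is Maximal Marginal Relevance (MMR). The study does not prescribe it; it is shown here only as one possible way to act on that advice. The sketch assumes documents and the query are already embedded as plain lists of floats, and the weight `lam` is a tunable assumption (1.0 means pure relevance, lower values penalize redundancy more).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mmr(query_vec, doc_vecs, k, lam=0.7):
    """Return indices of up to `k` documents chosen by Maximal Marginal Relevance."""
    selected = []
    candidates = list(range(len(doc_vecs)))
    while candidates and len(selected) < k:

        def score(i):
            relevance = cosine(query_vec, doc_vecs[i])
            # Redundancy is the closest match among documents already picked.
            redundancy = max(
                (cosine(doc_vecs[i], doc_vecs[j]) for j in selected),
                default=0.0,
            )
            return lam * relevance - (1 - lam) * redundancy

        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected


query = [1.0, 1.0]
docs = [[1.0, 0.9], [1.0, 0.8], [0.2, 1.0]]  # docs 0 and 1 are near-duplicates
print(mmr(query, docs, k=2, lam=0.5))  # [0, 2]
print(mmr(query, docs, k=2, lam=1.0))  # [0, 1]
```

With `lam=0.5` the second pick skips the near-duplicate in favor of a document that adds something new, while `lam=1.0` falls back to plain relevance ranking.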

Moving forward, researchers are likely to explore techniques that help AI models handle multiple documents more gracefully. One approach is to develop better retriever systems or re-rankers that can identify which documents truly add value and which ones only introduce conflict. Another angle is improving the language models themselves: if one model (like Qwen-2) managed to cope with many documents without losing accuracy, examining how it was trained or structured could offer clues for making other models more robust. Perhaps future large language models will incorporate mechanisms to recognize when two sources are saying the same thing (or contradicting each other) and focus accordingly. The goal would be to enable models to make use of a rich variety of sources without falling prey to confusion, effectively getting the best of both worlds (breadth of information and clarity of focus).

It’s also worth noting that as AI systems gain larger context windows (the ability to read more text at once), simply dumping more data into the prompt isn’t a silver bullet. Bigger context doesn’t automatically mean better comprehension. This study shows that even if an AI can technically read 50 pages at a time, giving it 50 pages of mixed-quality information may not yield a good result. The model still benefits from having curated, relevant content to work with, rather than an indiscriminate dump. In fact, intelligent retrieval may become even more important in the era of massive context windows, to ensure the extra capacity is used for useful knowledge rather than noise.

The findings from “More Documents, Same Length” (the aptly titled paper) encourage a re-examination of our assumptions in AI research. Sometimes, feeding an AI all the data we have is not as effective as we think. By focusing on the most relevant pieces of information, we not only improve the accuracy of AI-generated answers but also make the systems more efficient and easier to trust. It’s a counterintuitive lesson, but one with exciting ramifications: future RAG systems might be both smarter and leaner by carefully choosing fewer, better documents to retrieve.