Cerebras Systems announced on Tuesday that it has made Meta Platforms’s Llama perform as well in a small version as it does in a large version by adding the increasingly popular generative artificial intelligence (AI) technique known as “chain of thought.” The AI computer maker announced the advance at the start of the annual NeurIPS conference on AI.

“This is a closed-source-only capability, but we wanted to bring this capability to the most popular ecosystem, which is Llama,” said James Wang, head of Cerebras’s product marketing effort, in an interview with ZDNET.

The project is the latest in a line of open-source projects Cerebras has done to demonstrate the capabilities of its purpose-built AI computer, the “CS-3,” which it sells in competition with the status quo in AI: GPU chips from the customary vendors, Nvidia and AMD.

The company was able to train the Llama 3.1 open-source AI model that uses only 70 billion parameters to reach the same or better accuracy on various benchmark tests as the much larger 405-billion-parameter version of Llama.

These tests include the CRUX test of “complex reasoning tasks,” developed at MIT and Meta, and LiveCodeBench for code generation challenges, developed at U.C. Berkeley, MIT, and Cornell University, among others.

Chain of thought can enable models that use less training time, data, and computing power to equal or surpass a large model’s performance.

“Essentially, we’re now beating Llama 3.1 405B, a model that’s some seven times larger, just by thinking more at inference time,” said Wang.

The idea behind chain-of-thought processing is for the AI model to detail the sequence of calculations performed in pursuit of the final answer, to achieve “explainable” AI. Such explainable AI could conceivably give humans greater confidence in AI’s predictions by disclosing the basis for its answers.

OpenAI has popularized the chain-of-thought approach with its recently released “o1” large language model.

Cerebras’s answer to o1, dubbed “Cerebras Planning and Optimization,” or CePO, works by requiring Llama, at the time the prompt is submitted, to “produce a plan to solve the given problem step-by-step,” carry out the plan repeatedly, analyze the responses to each execution, and then select a “best of” answer.

“Unlike a traditional LLM, where the code is just literally token by token by token, this will look at its own code that it generated and see, does it make sense?” Wang explained. “Are there syntax errors? Does it actually accomplish what the person asks for? And it will run this kind of logic loop of plan execution and cross-checking multiple times.”
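The loop Wang describes follows a familiar plan, execute, and select pattern. The Python sketch below is a hypothetical illustration of that pattern only: `plan_execute_select`, the toy planner, the canned strings standing in for model samples, and the scoring rule (does the code compile, and does it do what was asked?) are all assumptions, not Cerebras’s CePO implementation.

```python
from typing import Callable, List


def plan_execute_select(
    problem: str,
    make_plan: Callable[[str], List[str]],
    execute: Callable[[str, List[str], int], str],
    score: Callable[[str, str], float],
    n_runs: int = 3,
) -> str:
    """Hypothetical plan/execute/select loop: plan once, run the plan
    n_runs times, and return the highest-scoring candidate answer."""
    plan = make_plan(problem)  # step 1: a step-by-step plan
    candidates = [execute(problem, plan, attempt) for attempt in range(n_runs)]  # step 2
    return max(candidates, key=lambda answer: score(problem, answer))  # step 3: "best of"


# Toy stand-ins for model calls (hypothetical, for illustration only).
CANNED = [
    "def add(a, b) return a + b",        # syntax error
    "def add(a, b):\n    return a - b",  # runs, but does the wrong thing
    "def add(a, b):\n    return a + b",  # correct
]


def toy_plan(problem: str) -> List[str]:
    return ["define a function add(a, b)", "return the sum of a and b"]


def toy_execute(problem: str, plan: List[str], attempt: int) -> str:
    # A real system would sample the model here; we cycle through canned outputs.
    return CANNED[attempt % len(CANNED)]


def toy_score(problem: str, code: str) -> float:
    # Cross-check 1: are there syntax errors?
    try:
        compiled = compile(code, "<candidate>", "exec")
    except SyntaxError:
        return 0.0
    # Cross-check 2: does it accomplish what was asked?
    namespace: dict = {}
    exec(compiled, namespace)
    return 1.0 if namespace["add"](2, 3) == 5 else 0.5


best = plan_execute_select("Write add(a, b)", toy_plan, toy_execute, toy_score)
print(best)
```

Only the correct candidate survives both checks, so the loop returns it even though two of the three attempts were flawed.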

In addition to matching or exceeding the 405B version of Llama 3.1, Cerebras was able to take the latest Llama version, 3.3, and make it perform at the level of “frontier” large language models such as Anthropic’s Claude 3.5 Sonnet and OpenAI’s GPT-4 Turbo.

“This is the first time, I think, anyone has taken a 70B model, which is generally considered medium-sized, and achieved frontier-level performance,” said Wang.

Humorously, Cerebras also put Llama to the “Strawberry Test,” a prompt that alludes to the “strawberry” code name for OpenAI’s o1. When the “r”s in the word are multiplied, as in “strrrawberrry,” and language models are asked to give the number of r’s, they often fail. Llama 3.1 was able to correctly report varying numbers of r’s using chain of thought.
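The correct answers are trivial to check deterministically. The short Python sketch below is a hypothetical illustration, not Cerebras’s test harness: it walks the word one character at a time and keeps a running count, roughly what a chain-of-thought response does when it spells a word out before answering.

```python
def count_letter_step_by_step(word: str, letter: str) -> int:
    """Count occurrences of `letter` by stepping through `word` one character
    at a time, printing each hit the way a reasoning trace might."""
    running_total = 0
    for position, char in enumerate(word, start=1):
        if char == letter:
            running_total += 1
            print(f"position {position}: '{char}', count is now {running_total}")
    return running_total


print(count_letter_step_by_step("strrrawberrry", "r"))  # → 6
```

The ordinary spelling, “strawberry,” gives 3 under the same procedure.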

From a corporate perspective, Cerebras is eager to demonstrate the hardware and software advantages of its AI computer, the CS-3.

The work on Llama was done on CS-3s using Cerebras’s WSE3 chip, the world’s largest semiconductor. The company was able to run the Llama 3.1 70B model, as well as the newer Llama 3.3, with chain of thought without the typical lag seen in o1 and other models running on Nvidia and AMD chips, said Wang.

The chain-of-thought version of Llama 3.1 70B is “the only reasoning model that runs in real time” when running on the Cerebras CS-3s, the company claims. “OpenAI reasoning model o1 runs in minutes; CePO runs in seconds.”

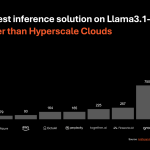

Cerebras, which recently launched what it calls “the world’s fastest inference service,” claims the CS-3 machines are 16 times faster than the fastest service on GPU chips, processing 2,100 tokens every second.
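The two numbers in that claim imply a GPU baseline. The arithmetic below assumes “16 times faster” refers to the same tokens-per-second measure; the 10,000-token length of a chain-of-thought response is a hypothetical figure chosen for illustration, not one from Cerebras.

```python
CEREBRAS_TOKENS_PER_SEC = 2_100  # figure claimed by Cerebras
CLAIMED_SPEEDUP = 16             # "16 times faster" than the fastest GPU service


def seconds_to_generate(tokens: int, tokens_per_sec: float) -> float:
    """Time to emit `tokens` at a steady generation rate."""
    return tokens / tokens_per_sec


# Implied speed of the fastest GPU-based service under the stated assumption.
gpu_tokens_per_sec = CEREBRAS_TOKENS_PER_SEC / CLAIMED_SPEEDUP
print(f"Implied GPU baseline: {gpu_tokens_per_sec:.2f} tokens/s")  # 131.25

# Hypothetical length of a verbose reasoning trace.
reasoning_tokens = 10_000
print(f"CS-3: {seconds_to_generate(reasoning_tokens, CEREBRAS_TOKENS_PER_SEC):.1f} s")  # 4.8 s
print(f"GPU:  {seconds_to_generate(reasoning_tokens, gpu_tokens_per_sec):.1f} s")       # 76.2 s
```

Because chain of thought multiplies the number of tokens generated per answer, the per-token rate translates directly into the “seconds versus minutes” gap the company describes.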

Cerebras’s experiment supports a growing sense that not only the training of AI models but also the making of predictions in production is scaling to ever-larger computing needs as prompts become more complex.

In general, said Wang, the accuracy of large language models will improve in proportion to the amount of compute used, both in training and in inference; however, the factor by which performance improves will vary depending on which approach is used in each case.

“Different techniques will scale with compute by different degrees,” said Wang. “The slope of the lines will be different. The remarkable thing, and why scaling laws are talked about, is the fact that it scales at all, and seemingly without end.”

“The classical view was that improvements would plateau and you would need algorithmic breakthroughs,” he said. “Scaling laws say, ‘No, you can just throw more compute at it with no practical limit.’ The type of neural network, reasoning method, etc. affects the rate of improvement, but not its scalable nature.”
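One common way to picture “the slope of the lines” is to plot a benchmark score against the logarithm of compute and fit a straight line. The Python sketch below assumes that log-linear form, which the article does not specify, and uses made-up scores for two hypothetical techniques; it only shows how a least-squares fit recovers each technique’s slope.

```python
import math
from typing import Sequence


def log_compute_slope(compute: Sequence[float], score: Sequence[float]) -> float:
    """Least-squares slope of score versus log10(compute)."""
    xs = [math.log10(c) for c in compute]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(score) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, score))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Hypothetical compute budgets (arbitrary units) and synthetic scores.
compute = [1e3, 1e4, 1e5, 1e6]
technique_a = [40 + 5 * math.log10(c) for c in compute]  # steeper line
technique_b = [40 + 2 * math.log10(c) for c in compute]  # shallower line

print(round(log_compute_slope(compute, technique_a), 6))  # → 5.0
print(round(log_compute_slope(compute, technique_b), 6))  # → 2.0
```

Both synthetic techniques keep improving as compute grows; they differ only in how quickly, which is the distinction Wang draws between the rate of improvement and its scalable nature.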

In different implementations, chain of thought can output either a verbose series of its intermediate results or a kind of status message saying something like “thinking.” Asked which Cerebras opted for, Wang said that he had not himself seen the actual output, but that “it’s probably verbose. When we release stuff that’s designed to serve Llama and open-source models, people like to see the intermediate results.”

Also on Tuesday, Cerebras announced it has demonstrated “initial” training of a large language model that has one trillion parameters, in a research project carried out with Sandia National Laboratories, a laboratory run by the US Department of Energy.

The work was done on a single CS-3, combined with Cerebras’s purpose-built memory computer, the MemX. A special version of the MemX was boosted to 55 terabytes of memory to hold the parameter weights of the model, which were then streamed to the CS-3 over Cerebras’s dedicated networking computer, the SwarmX.

The CS-3 system, Cerebras claims, would replace 287 of Nvidia’s top-of-the-line “Grace Blackwell 200” combination CPU and GPU chips that would be needed to access equivalent memory.
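Cerebras does not show its arithmetic, but the 287 figure is consistent with dividing the MemX’s 55 terabytes by roughly 192 GB of HBM per Blackwell GPU. The sketch below rests on two assumptions not stated in the article: decimal terabytes (1 TB = 1,000 GB) and 192 GB of HBM per GPU. It also shows what 55 TB means per parameter for a one-trillion-parameter model.

```python
import math

MEMX_TB = 55                      # MemX capacity in the Sandia run, per the article
PARAMETERS = 1_000_000_000_000    # one trillion parameters
HBM_PER_GPU_GB = 192              # assumption: HBM per Blackwell GPU, not from the article

# Decimal units assumed: 1 TB = 10**12 bytes = 1,000 GB.
memx_bytes = MEMX_TB * 10**12
bytes_per_parameter = memx_bytes / PARAMETERS

# Round up: a fractional GPU still means buying a whole one.
gpus_needed = math.ceil(MEMX_TB * 1_000 / HBM_PER_GPU_GB)

print(bytes_per_parameter)  # → 55.0
print(gpus_needed)          # → 287
```

With binary units or a different per-GPU capacity the count shifts, so the match to 287 should be read as a plausibility check rather than Cerebras’s own derivation.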

The combination of the single CS-3 and the MemX takes up two standard telco racks of equipment, said Wang. The company claims that this takes less than one percent of the space and power of the equivalent GPU arrangement.

The MemX device uses commodity DRAM, known as DDR5, in contrast to GPU cards, which use more expensive “high-bandwidth memory,” or HBM.

“It doesn’t touch the HBM supply chain, so it’s extremely easy to procure, and it’s inexpensive,” said Wang.

Cerebras is betting the real payoff is in the programming model. To program hundreds of GPUs in concert, said Wang, a total of 20,507 lines of code are needed to coordinate an AI model’s Python, C, C++, and shell code, and other resources. The same task can be carried out on the CS-3 machine with 565 lines of code.

“This isn’t just a win from a hardware perspective, it’s much simpler from a programming perspective,” he said, “because you can drop this trillion-parameter model directly into this block of memory,” whereas GPUs involve “managing” parameters across “thousands of 80-gigabyte blocks” of HBM.

The research project trained the AI model, whose identity was not disclosed, for 50 training steps, though it did not yet train it to “convergence,” meaning to a finished state. Training a trillion-parameter model to convergence would require many more machines and more time.

Nonetheless, Cerebras subsequently worked with Sandia to run the training on 16 of the CS-3 machines. Performance increased in a “linear” fashion, said Wang, meaning that training performance increases in proportion to the number of computers put into the cluster.

“The GPU has always claimed linear scaling, but it’s very, very difficult to achieve,” said Wang. “The whole point of our wafer-scale cluster is that because memory is this unified block, compute is separate, and we have a fabric in between, you don’t have to worry about that.”

Although the work with Sandia did not train the model to convergence, such large-model training “is important to our customers,” said Wang. “This is really step one before you do a big run that costs so much money,” he said, referring to a full run to convergence.

One of the company’s largest customers, investment firm G42 of the United Arab Emirates, “is very much motivated to achieve a world-class result,” he said. “They want to train a very, very large model.”

Sandia will probably publish on the experiment once it has some “final results,” said Wang.

The NeurIPS conference is one of the premier events in AI, often featuring the first public disclosure of breakthroughs. The full schedule for the week-long event can be found on the NeurIPS website.