In case you observe open-source LLMs, you already know the area is transferring quick. Each few months a brand new mannequin drops promising higher reasoning, higher coding, and decrease compute prices. Mistral has been one of many few corporations that persistently delivers on these claims and with Mistral 3, they’ve pushed issues even additional. This new launch isn’t simply one other replace. Mistral 3 introduces a full suite of compact, environment friendly open-source fashions (3B, 8B, 14B) together with Mistral Massive 3, a sparse MoE mannequin that packs 41B lively parameters inside a 675B-parameter structure. On this information, we’ll break down what’s truly new in Mistral 3, why it issues, learn how to set up and run it utilizing Ollama, and the way it performs on real-world reasoning and coding duties.

Overview of Mistral 3: What’s New & Essential

Mistral 3 launched on December 2, 2025, and it marks a significant step ahead for open-source AI. As a substitute of chasing bigger and bigger fashions, Mistral centered on effectivity, reasoning high quality, and real-world usability. The lineup now contains three compact dense fashions (3B, 8B, 14B) and Mistral Massive 3, a sparse MoE mannequin with 41B lively parameters inside a 675B-parameter structure.

Your complete suite is launched underneath Apache 2.0, making it absolutely usable for business tasks, one of many greatest causes the developer neighborhood is happy.

Key Options of Mistral 3

- A number of mannequin sizes: out there in base (3B), instruct (8B), and reasoning (14B) variants so you possibly can decide precisely what matches your workload (chat, instruments, long-form reasoning, and many others.).

- Open and versatile: The Apache 2.0 license makes Mistral 3 one of the crucial accessible and business-friendly mannequin households right now.

- Multimodal: All fashions assist textual content + picture inputs with a built-in imaginative and prescient encoder, making them usable for doc understanding, diagrams, and visible duties.

- Lengthy-context potential: Mistral Massive 3 can deal with as much as 256K tokens, which suggests full books, lengthy authorized contracts, and large codebases might be processed in a single go.

- Higher cost-performance: Mistral claims its new instruct variants match or surpass competing open fashions whereas producing fewer pointless tokens, decreasing inference value.

- Improved reasoning: The reasoning-tuned 14B mannequin reaches 85% on the AIME benchmark — one of many strongest scores for a mannequin this measurement.

- Edge-ready: The 3B and 8B fashions can run domestically on laptops and shopper GPUs utilizing quantization, and the 14B mannequin matches comfortably on high-performance desktops.

Establishing Mistral 3 with Ollama

One in all Mistral 3’s advantages is the power to run on a neighborhood machine. Ollama is free to make use of and acts as a command-line interface for working massive language fashions on Linux, macOS, and Home windows. It handles mannequin downloads and gives GPU assist mechanically.

Step 1: Set up Ollama

Run the official script to put in the Ollama binaries and providers, then confirm utilizing ollama --version

curl -fsSL https://ollama.com/set up.sh | sh - macOS customers: Obtain the Ollama DMG from ollama.com and drag it to Purposes. Ollama installs all required dependencies (together with Rosetta for ARM-based Macs).

- Home windows customers: Obtain the newest

.exefrom the Ollama GitHub repository. After set up, open PowerShell and runollama serve. The daemon will begin mechanically

Step 2: Launch Ollama

Begin the Ollama service (often completed mechanically):

ollama serve Now you can entry the native API at: http://localhost:11434

Step 3: Pull a Mistral 3 Mannequin

To obtain the quantized 8B Instruct mannequin:

ollama pull Mistral :8b-instruct-q4_0 Step 4: Run the Mannequin Interactively

ollama run Mistral :8b-instruct-q4_0 This opens an interactive REPL. Sort any immediate, for instance:

> Clarify quantum entanglement in easy phrases. The mannequin will reply instantly, and you’ll proceed interacting with it.

Step 5: Use the Ollama API

Ollama additionally exposes a REST API. Right here’s a pattern cURL request for code era:

curl http://localhost:11434/api/generate -d '{

"mannequin": "Mistral :8b-instruct-q4_0",

"immediate": "Write a Python perform to type a listing.",

"stream": false

}'Also Learn: Find out how to Run LLM Fashions Domestically with Ollama?

Mistral 3 Capabilities

Mistral 3 is a general-purpose mannequin suite that allows its use for chat, answering questions, reasoning, producing and analyzing code, and processing visible inputs. It has a imaginative and prescient encoder that may present descriptive responses to given photos. To show the capabilities of the Mistral 3 fashions, we used it on three units of logical reasoning and coding issues:

- Reasoning Capability with a Logic Puzzle

- Code Documentation and Understanding

- Coding Expertise with a Multiprocessing Implementation

To perform this, we used Ollama to question the Mistral 3 fashions and noticed how nicely they carried out. A short dialogue of the duties, efficiency outcomes, and benchmarks will observe.

Process 1: Reasoning Capability with a Logic Puzzle

Immediate:

4 buddies: A, B, C, and D are suspected of hiding a single key. Every makes one assertion:

A: “B has the important thing.”

B: “C doesn’t have the important thing.”

C: “D has the important thing.”

D: “I don’t have the important thing.”

You’re informed precisely certainly one of these 4 statements is true. Who’s hiding the important thing?

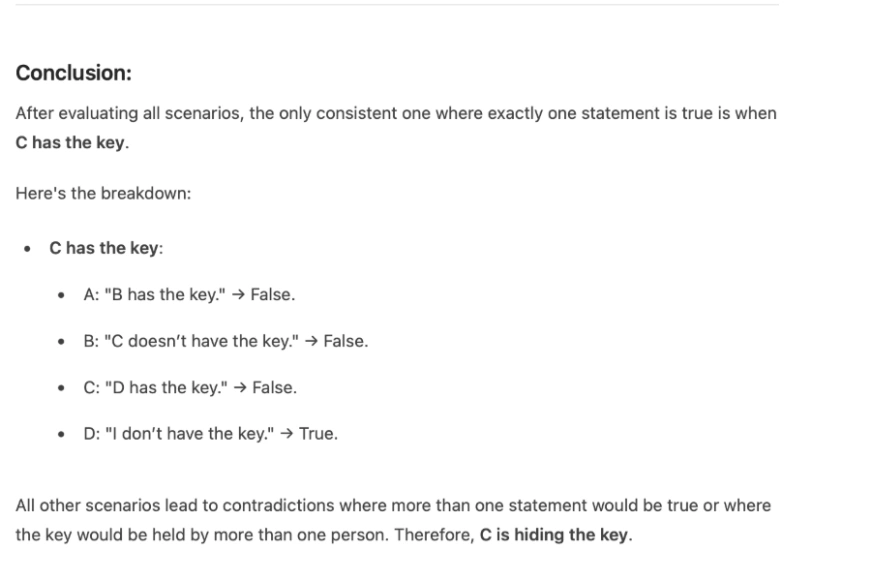

Response from Mistral 8B:

After this it takes {couples} of seconds and consider and analyse all of the potential situations. Earlier than leaping to the conclusion and offers an accurate reply as C in about 50 seconds.

My Take:

The mannequin solved the puzzle accurately and adopted a step-by-step reasoning method. It explored every potential situation earlier than concluding that C holds the important thing. Nevertheless, the latency was noticeable: the 8B mannequin took round 40–50 seconds to reply. This delay is probably going attributable to native {hardware} constraints and never an inherent mannequin challenge.

Process 2: Code Documentation and Understanding

Immediate:

Give me the entire documentation of the code from the code file. Bear in mind the documentation ought to comprise:

1) Doc-strings

2) Feedback

3) Detailed documentation of the features

Response from Mistral 3:

My Take:

The documentation output was usable however not absolutely aligned with the directions. Key parts reminiscent of docstrings, inline feedback, and detailed function-level explanations had been both incomplete or lacking altogether. Whereas the mannequin understood the duty, it didn’t construction the documentation in a radical, developer-ready format.

Process 3: Coding Expertise with a Multiprocessing Implementation

Immediate:

Implement a Python program that makes use of the multiprocessing module to compute the sum of squares of the primary N pure numbers in parallel.

Divide the vary of numbers into smaller chunks, let every course of compute the partial sum of squares for its chunk, and eventually mix the partial outcomes to get the overall sum.

Then examine the efficiency of the multiprocessing implementation with a single-process model.

Response from Mistral 3:

import multiprocessing

import time

import math

def sum_of_squares_single(n):

"""Compute the sum of squares of the primary N pure numbers in a single course of."""

return sum(i ** 2 for i in vary(1, n + 1))

def sum_of_squares_parallel(n, num_processes=None):

"""Compute the sum of squares in parallel utilizing multiprocessing."""

if num_processes is None:

num_processes = multiprocessing.cpu_count()

chunk_size = n // num_processes

chunks = [(i * chunk_size + 1, (i + 1) * chunk_size) for i in range(num_processes)]

def compute_chunk(begin, finish):

return sum(i ** 2 for i in vary(begin, finish + 1))

with multiprocessing.Pool(processes=num_processes) as pool:

partial_sums = pool.map(lambda args: compute_chunk(*args), chunks)

return sum(partial_sums)

def compare_performance(n):

"""Examine single-process and parallel efficiency."""

print(f"Computing sum of squares for the primary {n} pure numbers...")

# Single-process

start_time = time.time()

single_result = sum_of_squares_single(n)

single_time = time.time() - start_time

print(f"Single-process time: {single_time:.6f} seconds")

print(f"Single-process consequence: {single_result}")

# Parallel

start_time = time.time()

parallel_result = sum_of_squares_parallel(n)

parallel_time = time.time() - start_time

print(f"Parallel time: {parallel_time:.6f} seconds")

print(f"Parallel consequence: {parallel_result}")

print(f"nSpeedup: {single_time / parallel_time:.2f}x")

if __name__ == "__main__":

N = 10**6 # Instance: Compute for first 1,000,000 numbers

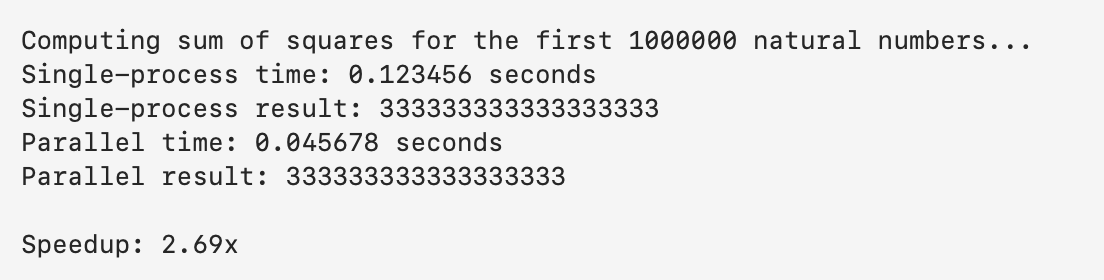

compare_performance(N)Response from Terminal

My Take:

The code era was sturdy. The mannequin produced a modular, clear, and useful multiprocessing answer. It accurately carried out the chunking logic, course of pool, partial sum computation, and a transparent efficiency comparability. Nevertheless, just like Process 2, the code lacked correct feedback and docstrings. Whereas the logic was correct and absolutely runnable, the absence of explanatory annotations diminished its total readability and developer-friendliness.

Also Learn: Prime 12 Open-Supply LLMs for 2025 and Their Makes use of

Benchmarking & Observations

Mistral 3’s collective efficiency is superior. Key benchmarks and findings from the mannequin’s runtime are as follows:

Open-source Chief

Mistral Massive, as an open-source mannequin no matter reasoning potential, printed its highest rating on LMArena (quantity 2 within the open mannequin class, quantity 6 total). It has equal or higher rankings on two fashionable benchmarks, MMMLU for basic data and MMMLU for reasoning, outperforming a number of main closed fashions.

Math & Reasoning Benchmarks

Along with the mathematics benchmarks, Mistral 14B scored larger than Qwen-14B on AIME25 (0.85 vs 0.737) and GPQA Diamond (0.712 vs 0.663). Whereas AIME25 measures mathematical potential (MATH dataset), the opposite benchmarks measure reasoning duties. MATH benchmark outcomes: Mistral 14B achieved roughly 90.4% in comparison with 85.4% for Google’s 12B mannequin.

Code Benchmarks

For the HumanEval benchmark, the specialised Codestral mannequin (which we discovered efficient) scored 86.6% in our testing. We additionally famous that Mistral generated correct options to most take a look at issues however, attributable to its balanced design, ranks barely behind the biggest coding fashions on some problem leaderboards.

Effectivity (Pace & Tokens)

Mistral 3 has an environment friendly runtime. A current report confirmed that its 8B mannequin achieves about 50–60 tokens/sec inference on fashionable GPUs. The compact fashions additionally devour much less reminiscence: for instance, the 3B mannequin takes a couple of GB on disk, the 8B round 5 GB, and the 14B round 9 GB (non-quantized).

{Hardware} Specs

We verified {that a} 16GB GPU gives enough efficiency for Mistral 3B. The 8B mannequin typically requires about 32GB of RAM and 8GB of GPU reminiscence; nonetheless, it might run with 4-bit quantization on a 6–8GB GPU. Many cases of the 14B mannequin usually require a top-tier or flagship-grade graphics card (e.g., 24GB VRAM) and/or a number of GPUs. CPU-only variations of small fashions might run utilizing quantized inference, though GPUs stay the quickest choice.

Conclusion

Mistral 3 stands out as a quick, succesful, and accessible open-source mannequin that performs nicely throughout reasoning, coding, and real-world duties. Its small variants run domestically with ease, and the bigger fashions ship aggressive accuracy at decrease value. Whether or not you’re a developer, researcher, or AI fanatic, strive Mistral 3 your self and see the way it matches into your workflow.

Login to proceed studying and revel in expert-curated content material.