AI-generated images are everywhere. They’re showing up in places where accuracy actually matters: news feeds, job applications, legal documents, even identity verification. And once you’re past the “wow, that looks real” phase, the next question becomes much more practical: how do you reliably tell what’s fake?

That’s where AI image detectors come in. But not all of them are built for the same audience.

In this comparison, I’m looking at TruthScan and Reality Defender, two tools that approach AI image detection from very different angles. One is designed to be accessible and immediately usable. The other is built like enterprise security infrastructure. Both are serious products, just aimed at very different problems.

What’s TruthScan?

TruthScan positions itself as a complete AI detection suite, not just a single-purpose checker. While this article focuses on its AI image detection capabilities, that’s only one part of a broader platform that also includes:

- AI text detection

- Deepfake video analysis

- Voice and audio detection

- Email scam detection

- Real-time monitoring tools

The common theme is speed and accessibility. TruthScan is designed to work out of the box, without requiring technical setup, custom integrations, or developer resources. You upload content, get a result, and move on.

For image detection specifically, TruthScan focuses on identifying whether an image is AI-generated or manipulated, the kind of question everyday users, journalists, students, and content moderators actually ask.

What’s Reality Defender?

Reality Defender is a completely different kind of tool.

It’s an enterprise-grade deepfake detection platform, built primarily for organizations that need continuous, large-scale protection against synthetic media. Banks, governments, defense organizations, and major tech companies: that’s the audience here.

Let’s Talk Accessibility

TruthScan is built for direct use. You don’t need technical knowledge. You don’t need an engineering team. You don’t need to think about deployment environments or SDKs. You upload an image and get an answer.

Reality Defender, on the other hand, is intentionally not built that way.

That’s not a flaw; it’s a design choice. Reality Defender is optimized for:

- Real-time protection inside existing platforms

- Automated moderation at massive scale

- Integration into internal security pipelines

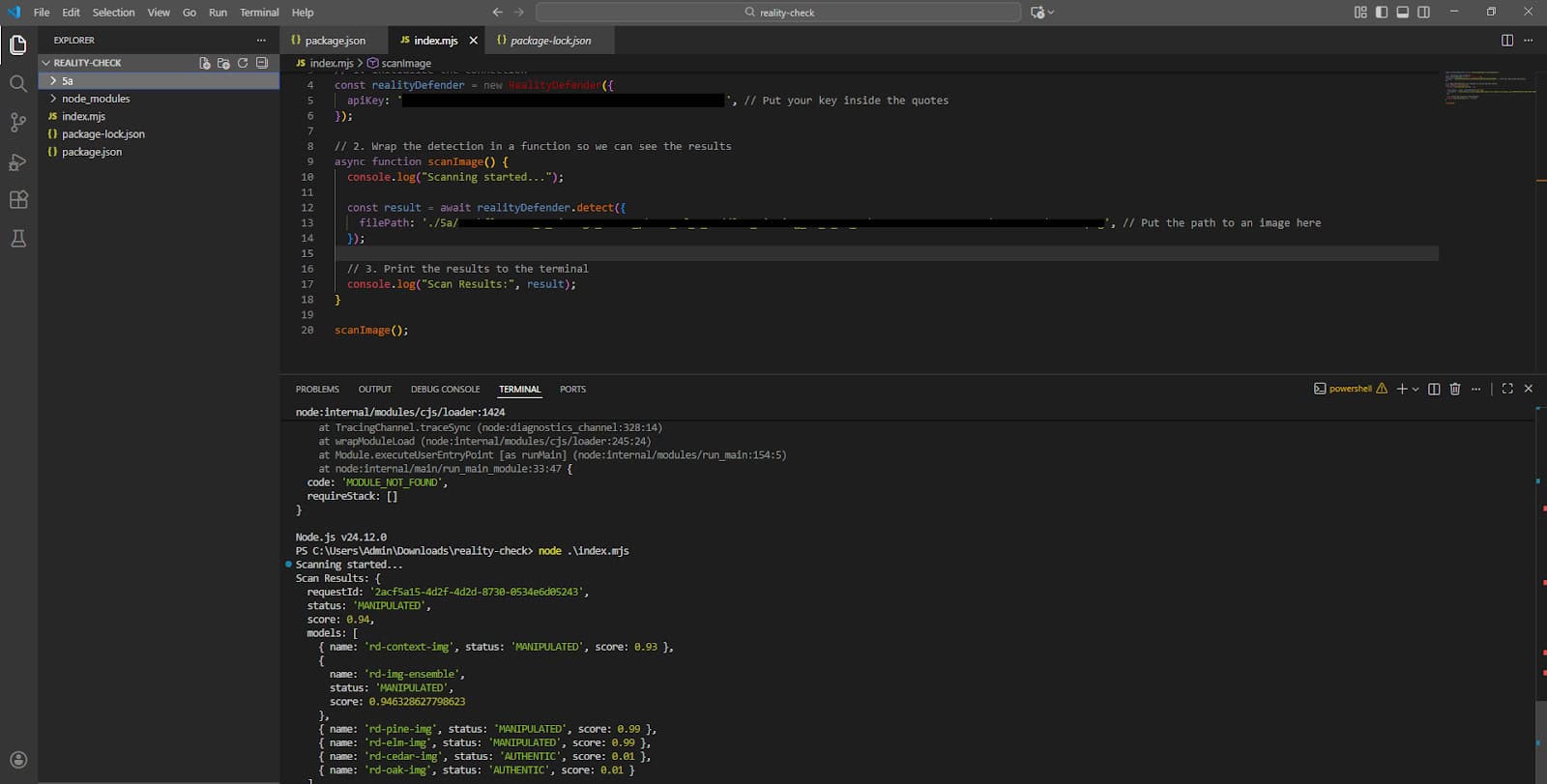

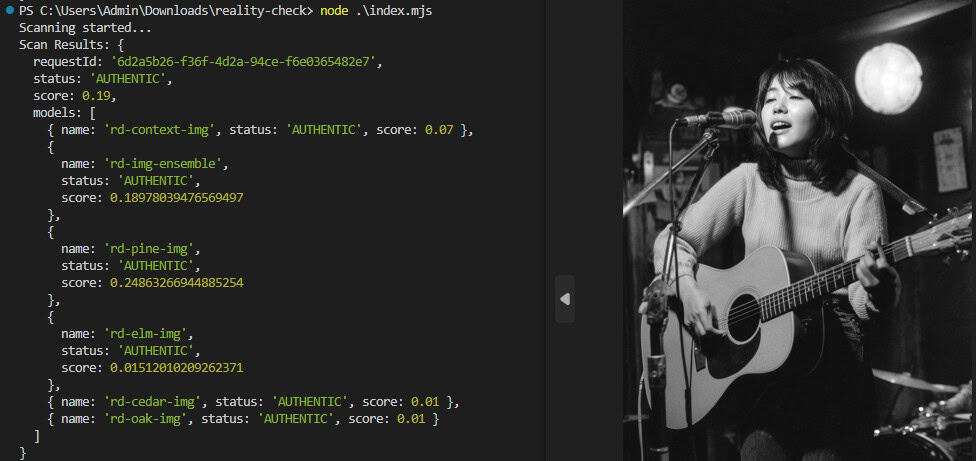

But for individual creators, researchers, or journalists, that same power becomes a barrier. Without a third-party interface, Reality Defender is simply inaccessible to most people. To use this tool, you’ll need to:

- Have basic knowledge of nodes.

- Have worked with APIs before.

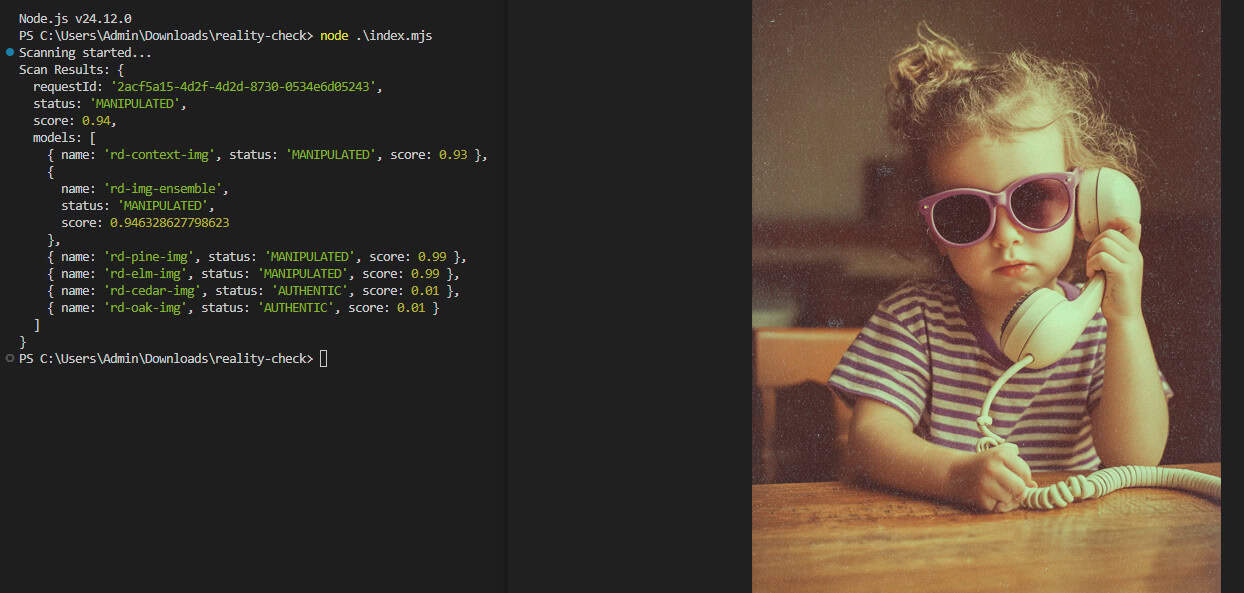

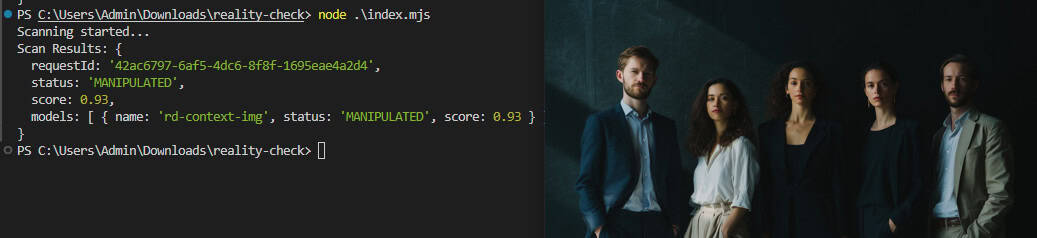

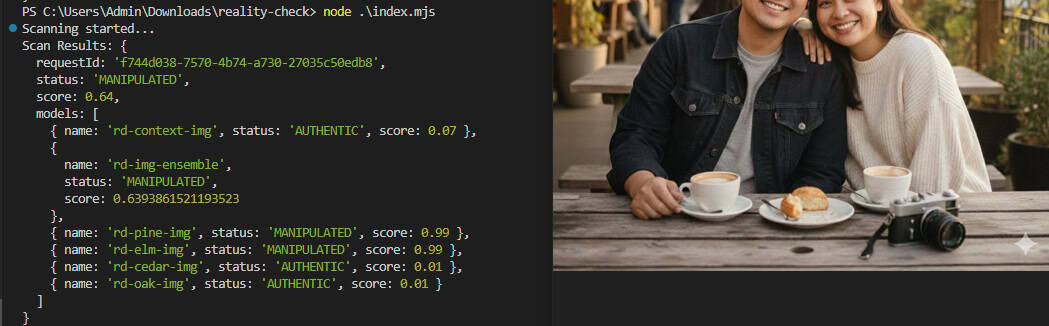

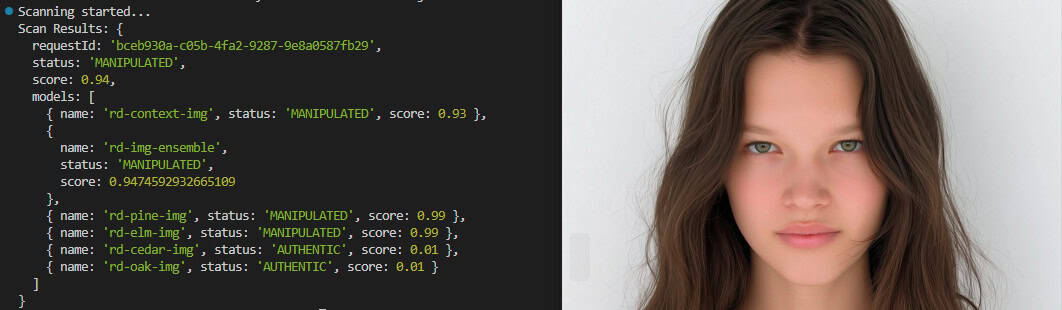

For reference, this is what my screen looked like while testing the tool.

Fairly straightforward for developers, but completely alien to laypeople.

So while both tools detect AI images, they’re solving very different problems.

TruthScan vs. Reality Defender: AI Detection

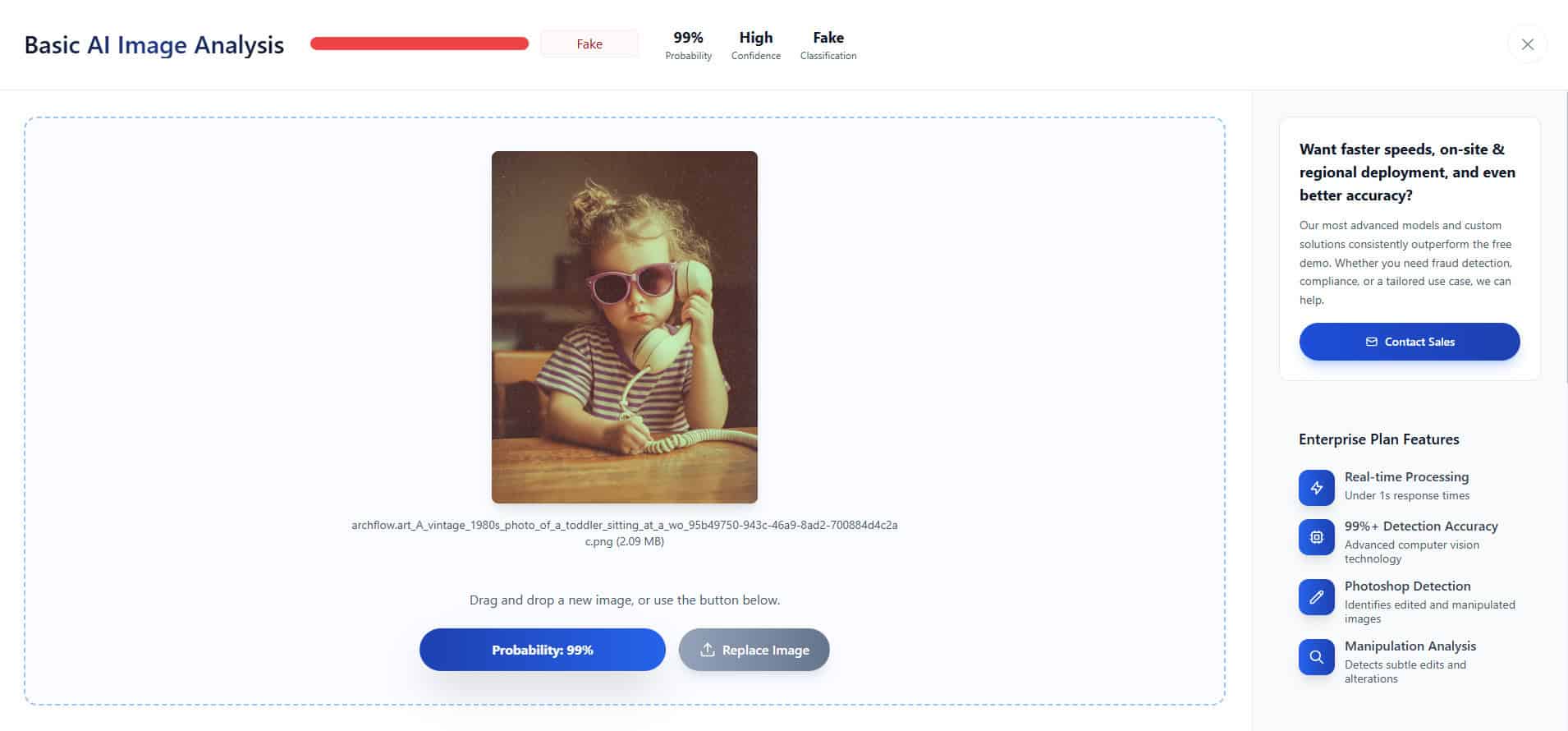

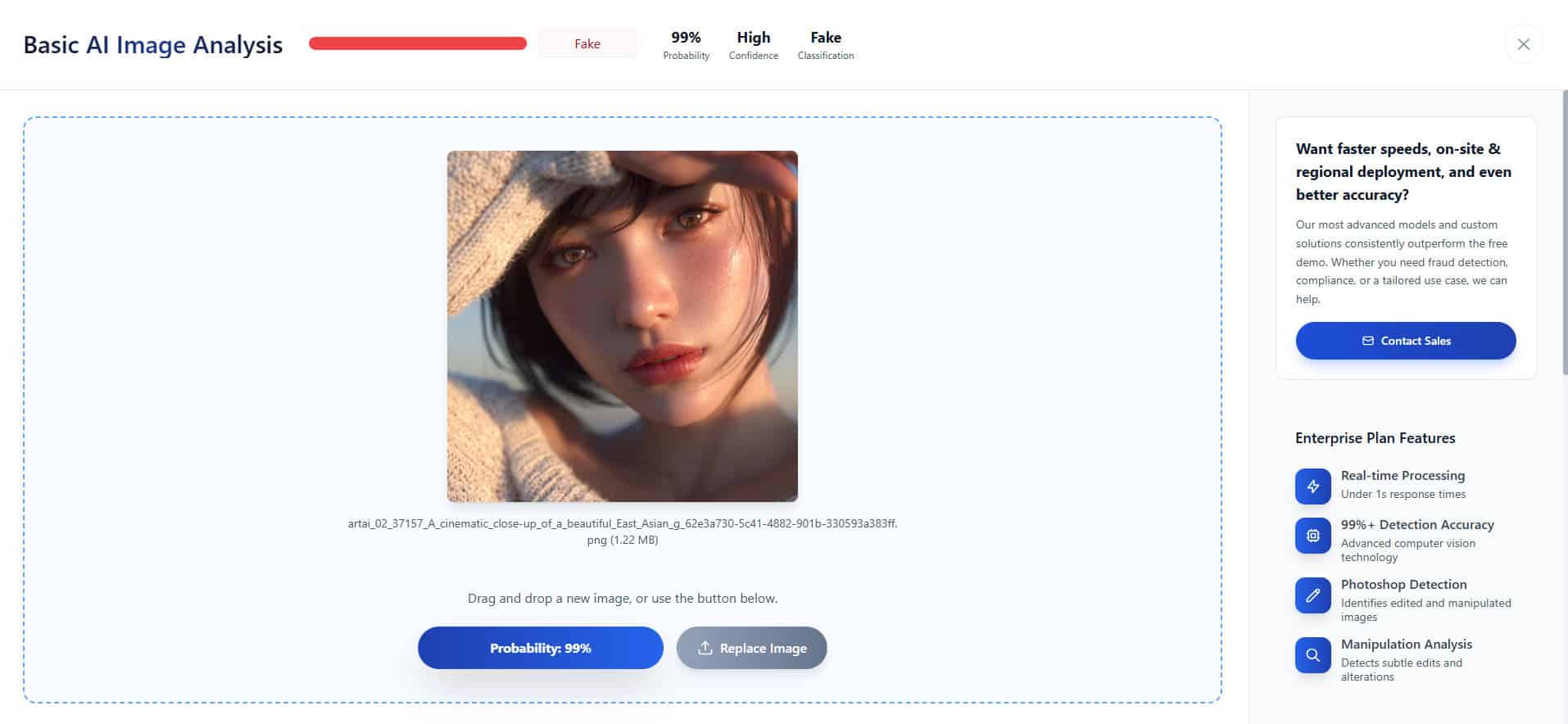

Test #1

TruthScan: Correctly classified image as AI-generated.

Confidence Score: 99%

Reality Defender: Correctly classified image as AI-generated.

Confidence Score: 94%

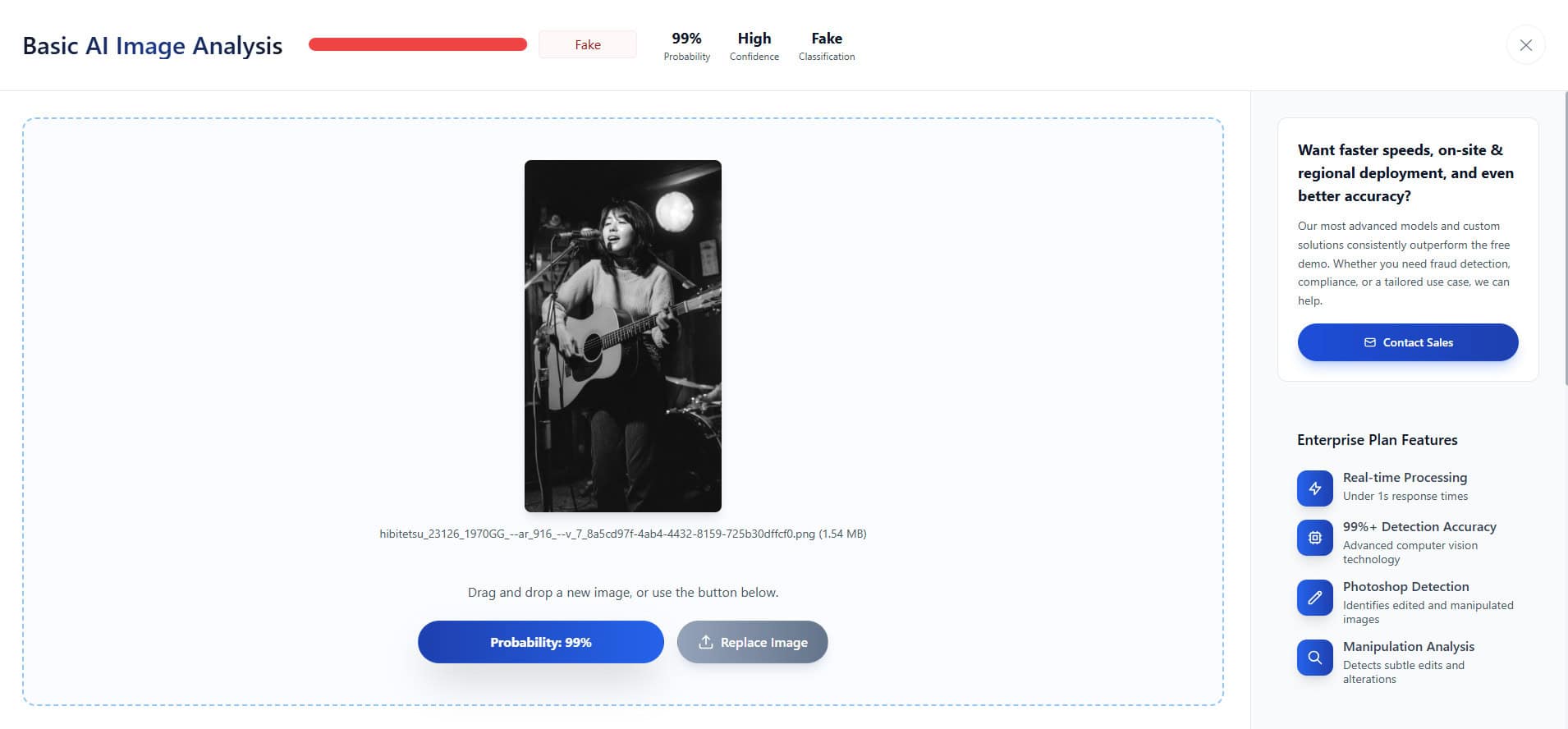

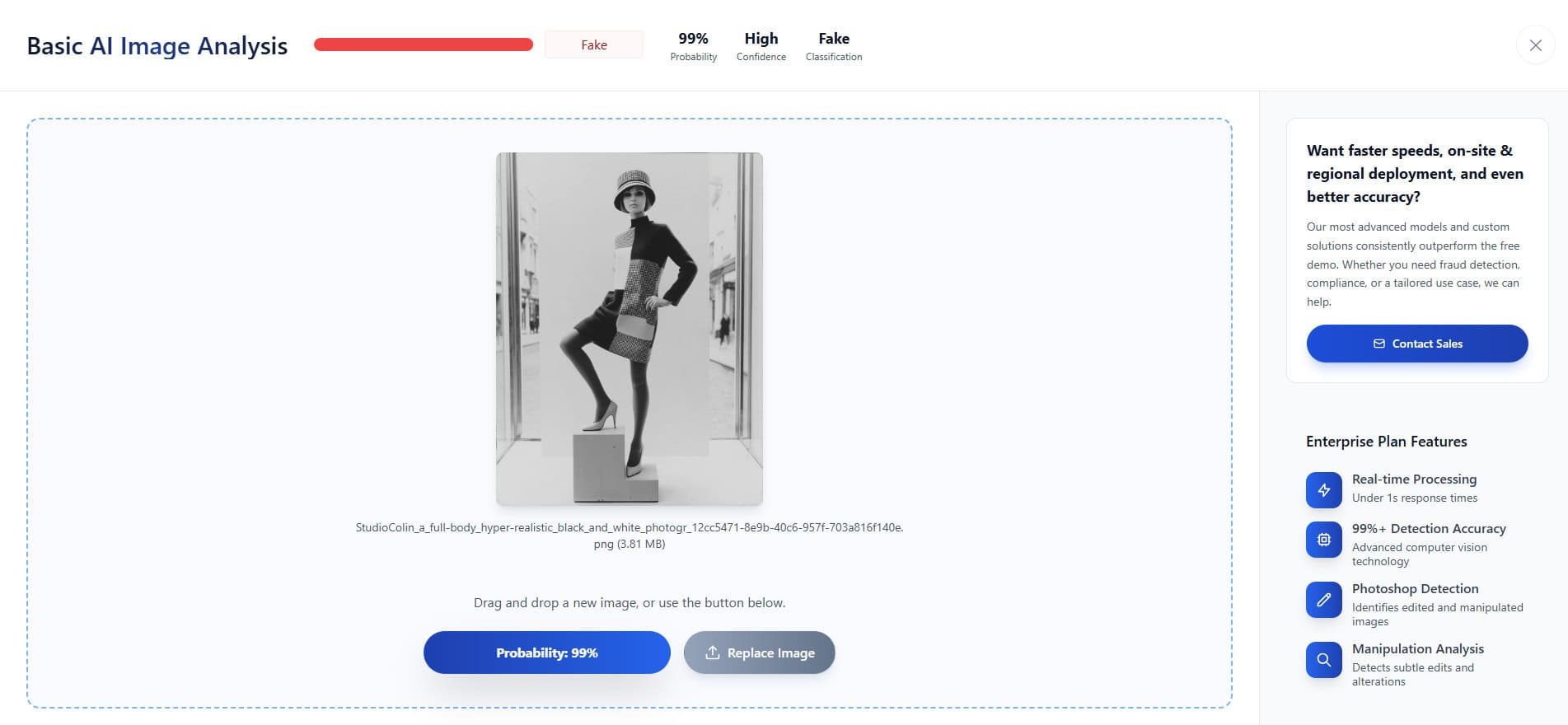

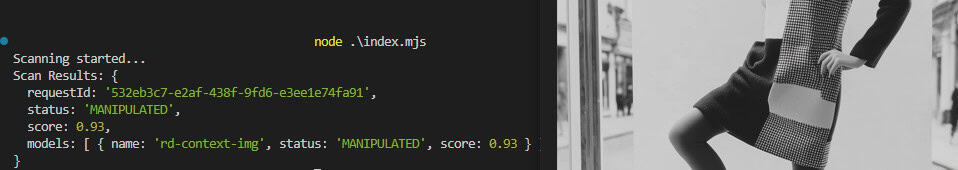

Test #2

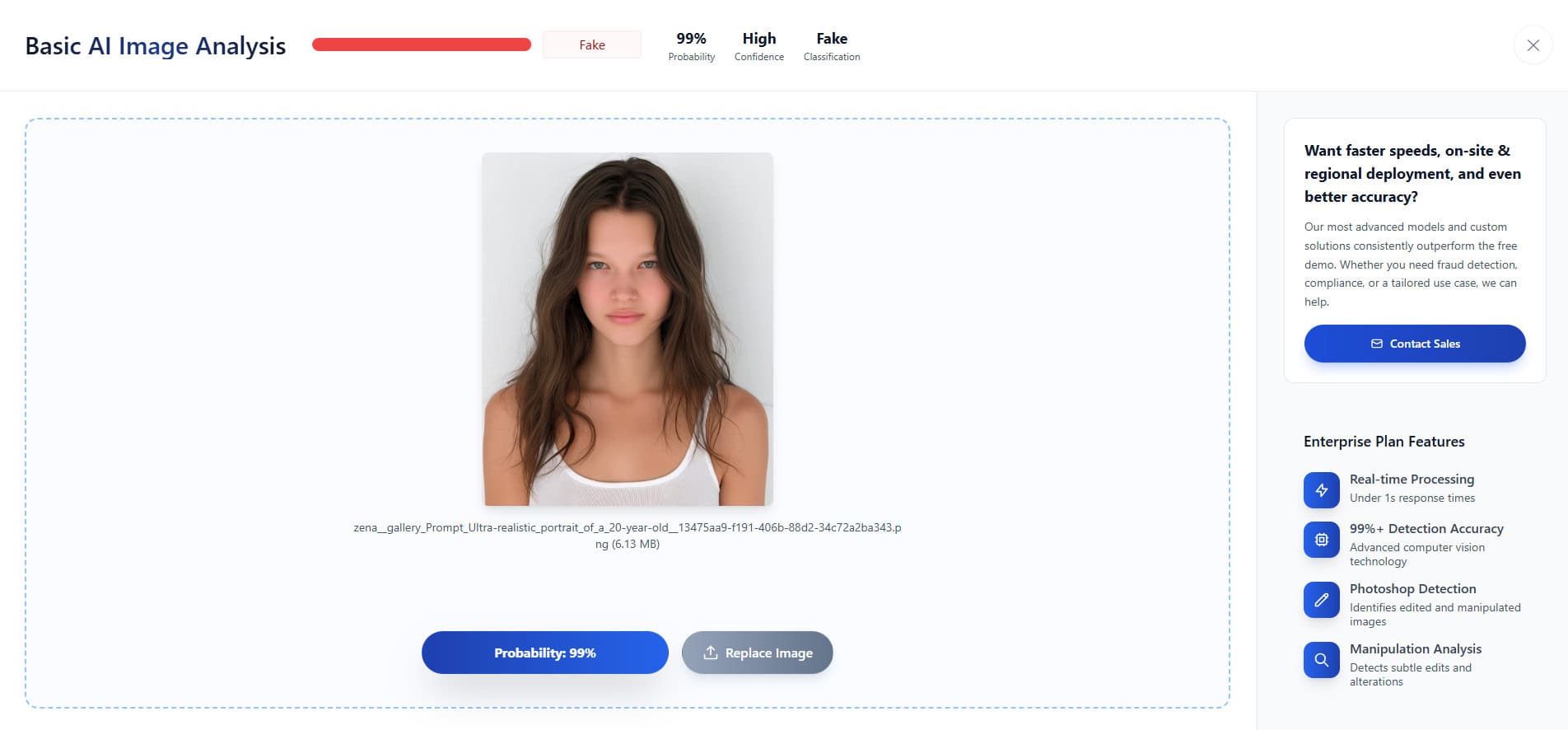

TruthScan: Correctly classified image as AI-generated.

Confidence Score: 99%

Reality Defender: Correctly classified image as AI-generated.

Confidence Score: 93%

Test #3

TruthScan: Correctly classified image as AI-generated.

Confidence Score: 99%

Reality Defender: Incorrectly classified image as human-created.

Confidence Score: 19%

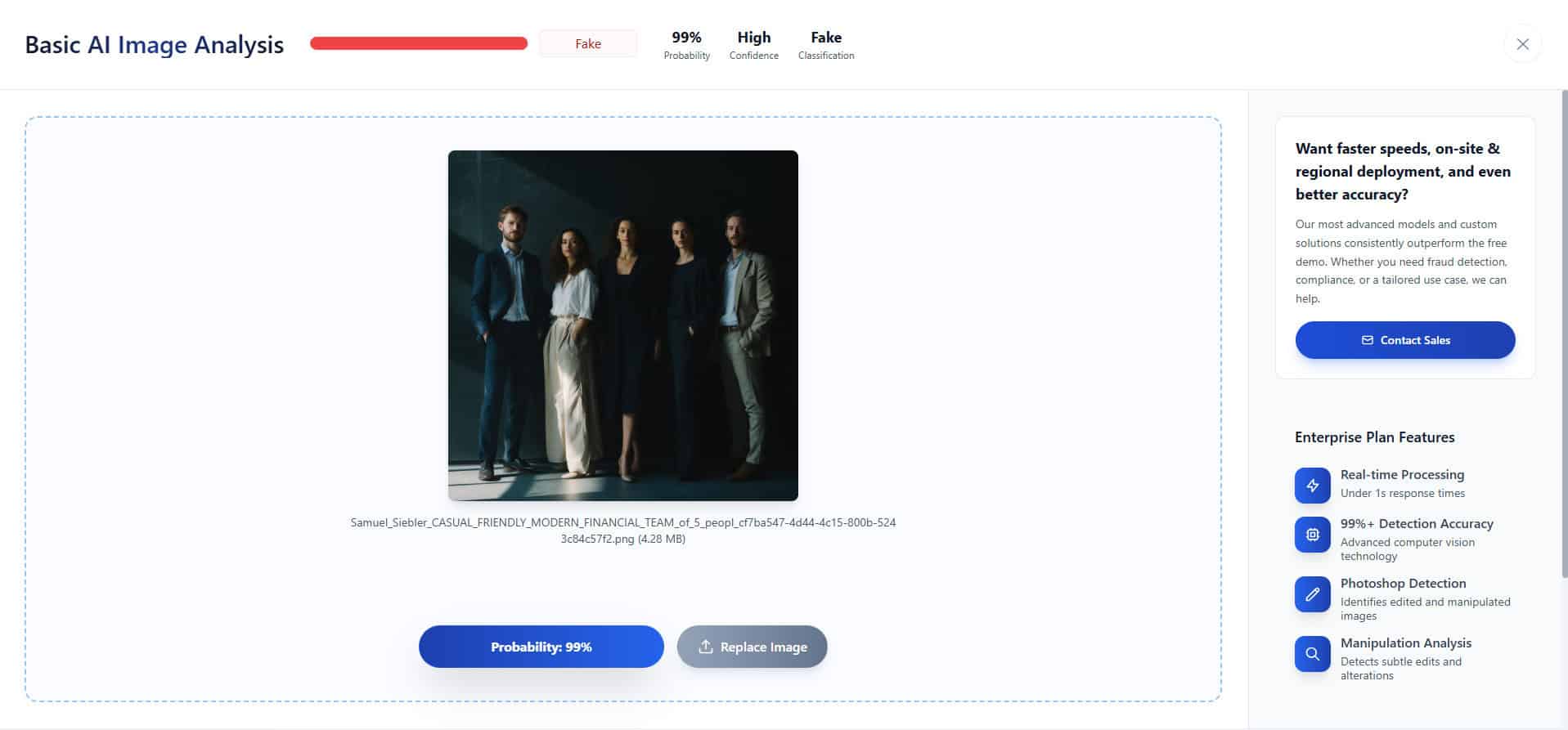

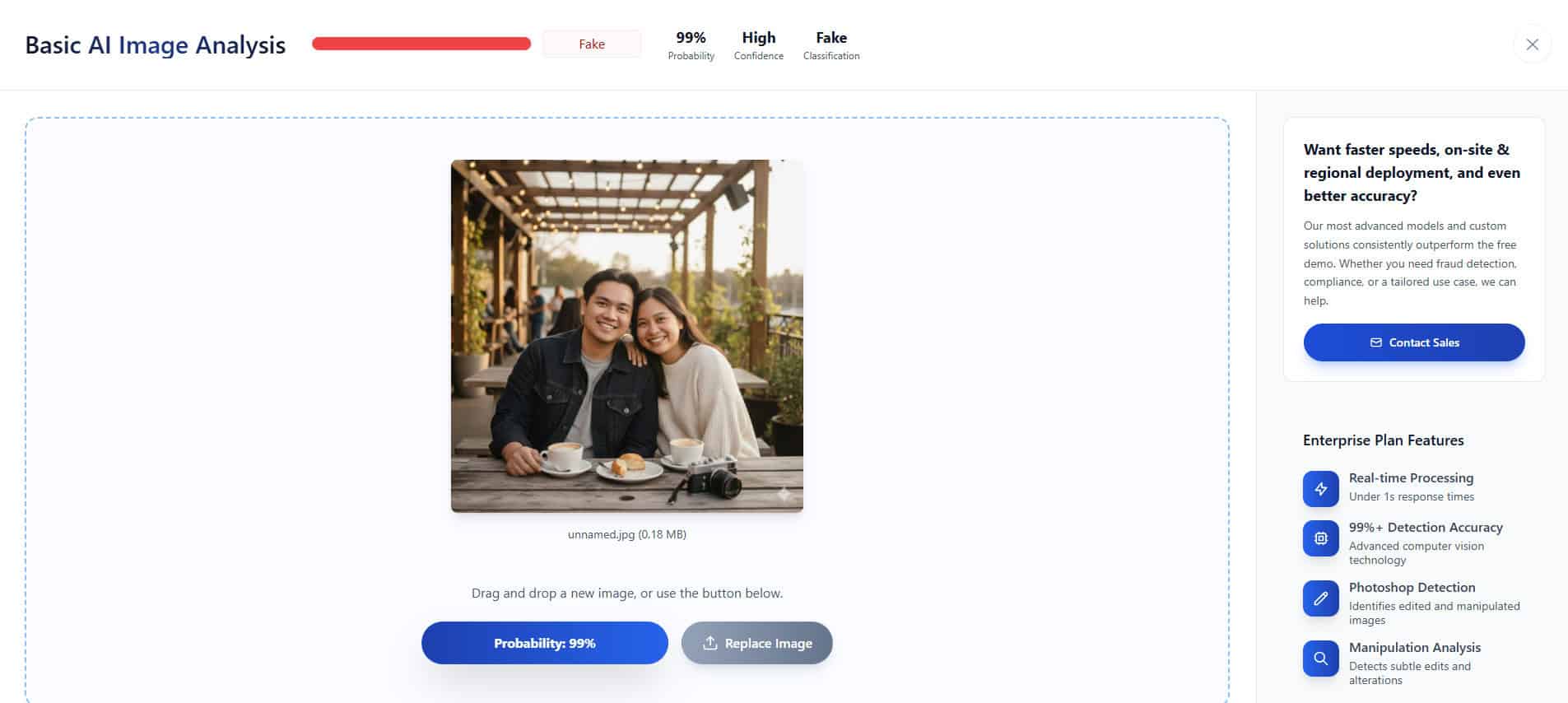

Test #4

TruthScan: Correctly classified image as AI-generated.

Confidence Score: 99%

Reality Defender: Correctly classified image as AI-generated.

Confidence Score: 93%

Test #5

TruthScan: Correctly classified image as AI-generated.

Confidence Score: 99%

Reality Defender: Correctly classified image as AI-generated.

Confidence Score: 93%

Test #6

TruthScan: Correctly classified image as AI-generated.

Confidence Score: 99%

Reality Defender: Correctly classified image as AI-generated.

Confidence Score: 64%

Test #7

TruthScan: Correctly classified image as AI-generated.

Confidence Score: 99%

Reality Defender: Correctly classified image as AI-generated.

Confidence Score: 94%

Average Score

TruthScan: 99%

Reality Defender: 78.57%
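The averages can be reproduced from the seven per-test confidence scores listed above. The short Python sketch below does that arithmetic and also counts how many verdicts were correct, which is a separate measure from the average confidence. The variable and function names are illustrative, and the sketch assumes every test image was in fact AI-generated, as the per-test verdicts indicate.

```python
from statistics import mean

# Confidence scores (percent) transcribed from the seven tests above.
truthscan_scores = [99, 99, 99, 99, 99, 99, 99]
reality_defender_scores = [94, 93, 19, 93, 93, 64, 94]

# Whether each verdict matched the ground truth.
# Assumption: all seven test images were AI-generated.
truthscan_correct = [True] * 7
reality_defender_correct = [True, True, False, True, True, True, True]


def average_score(scores):
    """Mean confidence score, rounded to two decimals."""
    return round(mean(scores), 2)


def accuracy(verdicts):
    """Percentage of verdicts that were correct, rounded to two decimals."""
    return round(100 * sum(verdicts) / len(verdicts), 2)


if __name__ == "__main__":
    print("TruthScan average score:", average_score(truthscan_scores))
    print("Reality Defender average score:", average_score(reality_defender_scores))
    print("TruthScan correct verdicts (%):", accuracy(truthscan_correct))
    print("Reality Defender correct verdicts (%):", accuracy(reality_defender_correct))
```

Keeping the two measures apart matters here: a single low-confidence miss (19% in Test #3) pulls Reality Defender’s average score down further than it pulls down its count of correct verdicts.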

The Bottom Line

TruthScan averaged a 99% confidence score and classified all seven test images correctly, which is an exceptionally strong result for AI image detection, especially in a landscape where generators are evolving fast and visual artifacts are getting harder to spot. At that level of accuracy, TruthScan isn’t just “good enough”; it’s reliable in a way that actually builds trust over repeated use.

Reality Defender averaged a 78.57% confidence score, with six of the seven images classified correctly. That is still a solid performance, particularly given its enterprise-focused, probabilistic approach. In high-security environments, that kind of signal is often combined with additional context, human review, and layered defenses, and in that setting, Reality Defender still absolutely makes sense.

But when you look at raw image detection accuracy alone, TruthScan clearly comes out ahead.

What makes this result even more notable is how TruthScan delivers it. You don’t need an API, a development team, or a custom integration. You just upload an image and get an answer, and in this case, that answer was correct in all seven tests.

Reality Defender remains an impressive piece of infrastructure for organizations securing large-scale systems. But if the question is who detects AI images more accurately in practice, the testing points decisively toward TruthScan.