AI models that play video games go back decades, but they generally specialize in one game and always play to win. Google DeepMind researchers have a different goal with their latest creation: a model that learned to play multiple 3D games like a human, but that also does its best to understand and act on your verbal instructions.

There are of course “AI” or computer characters that can do this kind of thing, but they’re more like features of a game: NPCs that you can control indirectly using formal in-game commands.

DeepMind’s SIMA (scalable instructable multiworld agent) doesn’t have any kind of access to the game’s internal code or rules; instead, it was trained on many, many hours of video showing gameplay by humans. From this data, and the annotations provided by data labelers, the model learns to associate certain visual representations with actions, objects and interactions. The researchers also recorded videos of players instructing one another to do things in game.

For example, it might learn from how the pixels move in a certain pattern on screen that this is an action called “moving forward,” or that when the character approaches a door-like object and uses the doorknob-looking object, that’s “opening” a “door.” Simple things like that: tasks or events that take a few seconds but are more than just pressing a key or identifying something.

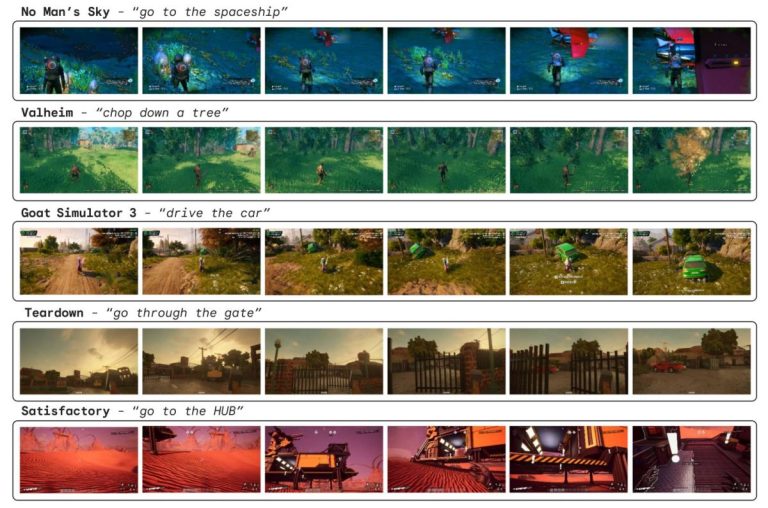

The training videos were taken in multiple games, from Valheim to Goat Simulator 3, the developers of which were involved with and consenting to this use of their software. One of the main goals, the researchers said in a call with press, was to see whether training an AI to play one set of games makes it capable of playing others it hasn’t seen, a process called generalization.

The answer is yes, with caveats. AI agents trained on multiple games performed better on games they hadn’t been exposed to. Of course, many games involve specific and unique mechanics or terms that will stymie even the best-prepared AI. Still, there’s nothing stopping the model from learning those except a lack of training data.

This is partly because, although there is lots of in-game lingo, there really are only so many “verbs” players have that actually affect the game world. Whether you’re assembling a lean-to, pitching a tent or summoning a magical shelter, you’re really “building a house,” right? The map of several dozen primitives the agent currently recognizes is really interesting to peruse.

The researchers’ ambition, on top of advancing the ball in agent-based AI fundamentally, is to create a more natural game-playing companion than the stiff, hard-coded ones we have today.

“Rather than having a superhuman agent you play against, you can have SIMA players beside you that are cooperative, that you can give instructions to,” said Tim Harley, one of the project’s leads.

Because all they see while playing is the pixels of the game screen, they have to learn how to do things in much the same way we do, but it also means they can adapt and produce emergent behaviors as well.

You may be curious how this stacks up against a common method of making agent-type AIs, the simulator approach, in which a mostly unsupervised model experiments wildly in a 3D simulated world running far faster than real time, allowing it to learn the rules intuitively and design behaviors around them without nearly as much annotation work.

“Traditional simulator-based agent training uses reinforcement learning for training, which requires the game or environment to provide a ‘reward’ signal for the agent to learn from, for example win/loss in the case of Go or Starcraft, or ‘score’ for Atari,” Harley told Trendster, and noted that this approach was used for those games and produced phenomenal results.

“In the games that we use, such as the commercial games from our partners,” he continued, “we do not have access to such a reward signal. Moreover, we are interested in agents that can do a wide variety of tasks described in open-ended text; it’s not feasible for each game to evaluate a ‘reward’ signal for each possible goal. Instead, we train agents using imitation learning from human behavior, given goals in text.”

In other words, having a strict reward structure can limit the agent in what it pursues, since if it is guided by score it will never attempt anything that does not maximize that value. But if it values something more abstract, like how close its action is to one it has observed working before, it can be trained to “want” to do almost anything as long as the training data represents it somehow.
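To make the contrast concrete, here is a toy sketch of imitation learning from text-conditioned demonstrations. It is not SIMA’s actual training code: SIMA learns from raw pixels with neural networks, whereas this sketch swaps in a simple count-based (tabular) policy, and the demonstration data, observation labels and function names are all hypothetical. What it does share with the approach Harley describes is that no reward signal appears anywhere; the only training signal is how well the policy matches what humans did.

```python
import math
from collections import Counter, defaultdict

# Hypothetical demonstrations: (text goal, coarse observation, human action).
# A real system would use video frames rather than labels like "door_ahead".
DEMOS = [
    ("open the door", "door_ahead", "interact"),
    ("open the door", "door_ahead", "interact"),
    ("open the door", "door_ahead", "move_forward"),  # a noisy demonstration
    ("open the door", "door_far", "move_forward"),
    ("chop a tree", "tree_ahead", "swing_axe"),
    ("chop a tree", "tree_far", "move_forward"),
]


def fit_policy(demos):
    """Tabular behavioral cloning: count human actions per (goal, observation)."""
    counts = defaultdict(Counter)
    for goal, obs, action in demos:
        counts[(goal, obs)][action] += 1
    return counts


def action_probs(policy, goal, obs):
    """Empirical action distribution; empty if the context was never shown."""
    counts = policy.get((goal, obs), Counter())
    total = sum(counts.values())
    return {a: n / total for a, n in counts.items()} if total else {}


def act(policy, goal, obs):
    """Pick the action humans chose most often in this context, if any."""
    probs = action_probs(policy, goal, obs)
    return max(probs, key=probs.get) if probs else None


def imitation_loss(policy, demos):
    """Average negative log-likelihood of the human actions under the policy."""
    nll = [-math.log(action_probs(policy, g, o)[a]) for g, o, a in demos]
    return sum(nll) / len(nll)


policy = fit_policy(DEMOS)
print(act(policy, "open the door", "door_ahead"))
print(act(policy, "build a house", "forest"))
print(imitation_loss(policy, DEMOS))
```

The second call asks for a goal that never appears in the demonstrations, so the toy policy has nothing to imitate and returns `None`. That mirrors the point above: the agent can be taught to pursue almost any goal, but only if the training data represents it somehow.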

Other companies are looking into this kind of open-ended collaboration and creation as well; conversations with NPCs are being looked at pretty hard as opportunities to put an LLM-type chatbot to work, for instance. And simple improvised actions or interactions are also being simulated and tracked by AI in some really interesting research into agents.

Of course there are also the experiments into infinite games like MarioGPT, but that’s another matter entirely.