Introduction

The ability to transform a single image into a detailed 3D model has long been a goal in computer vision and generative AI. Stability AI's TripoSR marks a significant leap forward in this quest, offering a new approach to 3D reconstruction from images. It gives researchers, developers, and creatives a fast and accurate way to turn 2D visuals into immersive 3D representations. The model also opens up applications across diverse fields, from computer graphics and virtual reality to robotics and medical imaging. In this article, we'll delve into the architecture, workings, features, and applications of Stability AI's TripoSR model.

What’s TripoSR?

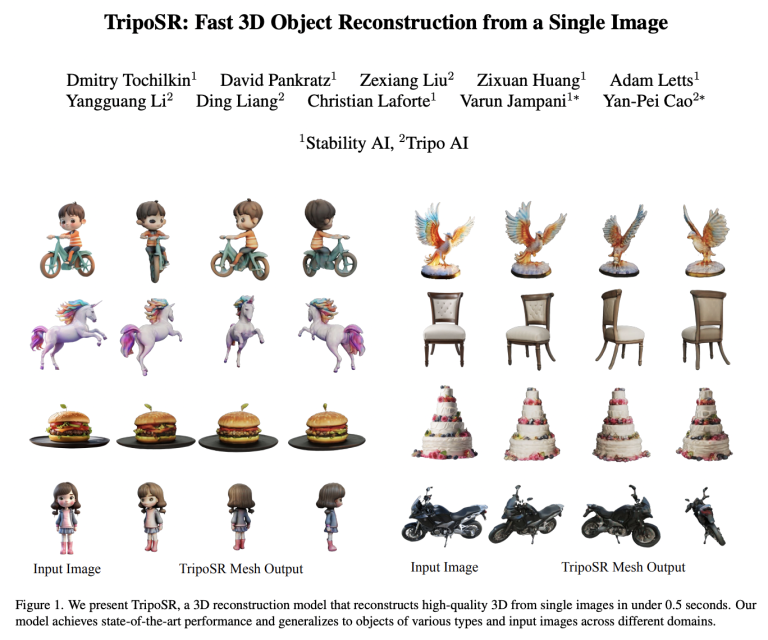

TripoSR is a 3D reconstruction model that uses a transformer architecture for fast feed-forward 3D generation, producing a 3D mesh from a single image in under 0.5 seconds. It is built on the LRM (Large Reconstruction Model) network architecture and integrates substantial improvements in data processing, model design, and training techniques. The model is released under the MIT license, with the aim of giving researchers, developers, and creatives access to the latest advances in 3D generative AI.

LRM Architecture of Stability AI's TripoSR

Similar to LRM, TripoSR uses a transformer architecture and is specifically designed for single-image 3D reconstruction. It takes a single RGB image as input and outputs a 3D representation of the object in the image. The core of TripoSR comprises three components: an image encoder, an image-to-triplane decoder, and a triplane-based neural radiance field (NeRF). Let's look at each of these components in turn.

Image Encoder

The image encoder is initialized with a pre-trained vision transformer model, DINOv1. This model projects an RGB image into a set of latent vectors that encode the global and local features of the image. These vectors contain the information needed to reconstruct the 3D object.
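To make "latent vectors" more concrete, here is a minimal NumPy sketch of the first stage of a ViT-style encoder. It cuts the image into fixed-size patches and projects each patch to a token. This is a toy under stated assumptions, not DINOv1 or TripoSR code. The weights are random rather than pre-trained, the patch size (16) and token width (64) are illustrative, and the transformer layers that would mix information between tokens are omitted. The helper names `patchify` and `encode` are hypothetical.

```python
import numpy as np

def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, 3) image into flattened, non-overlapping patch vectors."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    gh, gw = h // patch, w // patch
    # (gh, patch, gw, patch, c) -> (gh, gw, patch, patch, c) -> (gh * gw, patch * patch * c)
    x = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(gh * gw, patch * patch * c)

def encode(image: np.ndarray, patch: int = 16, dim: int = 64, seed: int = 0) -> np.ndarray:
    """Toy stand-in for a ViT encoder: patch embedding + [CLS] slot + position embeddings.
    Random weights replace pre-trained ones, and no attention layers are applied."""
    rng = np.random.default_rng(seed)
    patches = patchify(image, patch)
    w_embed = rng.normal(scale=0.02, size=(patches.shape[1], dim))
    tokens = patches @ w_embed                      # one token per patch (local features)
    cls = rng.normal(scale=0.02, size=(1, dim))     # slot a trained ViT uses for a global summary
    tokens = np.concatenate([cls, tokens], axis=0)
    pos = rng.normal(scale=0.02, size=tokens.shape) # position embeddings (random here)
    return tokens + pos

img = np.random.default_rng(1).random((224, 224, 3))
latents = encode(img)
print(latents.shape)
```

With a 224 × 224 input and 16-pixel patches, this yields 196 patch tokens plus the [CLS] slot. Each row is one latent vector.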

Image-to-Triplane Decoder

The image-to-triplane decoder transforms the latent vectors into the triplane-NeRF representation, a compact and expressive 3D representation suited to complex shapes and textures. The decoder consists of a stack of transformer layers, each with a self-attention layer and a cross-attention layer. The self-attention layers let the decoder attend to different parts of the triplane representation and learn the relationships between them. The cross-attention layers let it draw on the image's latent vectors.
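The decoder layer described above can be sketched in NumPy as follows. This is a hypothetical, single-head illustration under stated assumptions. The pre-norm residual layout, the feed-forward sub-layer, the toy sizes, and the random initial triplane tokens (which a trained model would learn) are our own choices and are not confirmed by the source. In the self-attention step, triplane tokens attend to each other. In the cross-attention step, they attend to the image encoder's latent vectors.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # normalization without learned scale/shift, for brevity
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def attention(queries, context, wq, wk, wv):
    """Single-head scaled dot-product attention of `queries` over `context`."""
    q, k, v = queries @ wq, context @ wk, context @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def decoder_block(tri_tokens, img_tokens, params):
    # 1) self-attention: triplane tokens exchange information with one another
    h = layer_norm(tri_tokens)
    x = tri_tokens + attention(h, h, *params["self"])
    # 2) cross-attention: triplane tokens query the image encoder's latent vectors
    x = x + attention(layer_norm(x), img_tokens, *params["cross"])
    # 3) position-wise feed-forward sub-layer with ReLU
    w1, w2 = params["mlp"]
    return x + np.maximum(layer_norm(x) @ w1, 0.0) @ w2

rng = np.random.default_rng(0)
dim, res = 32, 8                                    # toy sizes, far smaller than a real model
tri_tokens = rng.normal(size=(3 * res * res, dim))  # one token per cell of three res x res planes
img_tokens = rng.normal(size=(197, dim))            # e.g. the encoder output

def init_attn():
    return [rng.normal(scale=dim ** -0.5, size=(dim, dim)) for _ in range(3)]

params = {
    "self": init_attn(),
    "cross": init_attn(),
    "mlp": [rng.normal(scale=dim ** -0.5, size=(dim, 4 * dim)),
            rng.normal(scale=(4 * dim) ** -0.5, size=(4 * dim, dim))],
}
out = decoder_block(tri_tokens, img_tokens, params)
planes = out.reshape(3, res, res, dim)              # back to three feature planes
print(planes.shape)
```

A real decoder stacks many such blocks. The final reshape shows how the token sequence maps back onto three 2D feature planes, which is the form the triplane NeRF queries.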

Triplane-based Neural Radiance Field (NeRF)

The triplane-based NeRF model consists of a stack of multilayer perceptrons (MLPs) that predict the color and density of a 3D point in space. This component plays a crucial role in accurately representing the 3D object's shape and texture.
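The sketch below shows one common way to query a triplane NeRF. It assumes features from the three axis-aligned planes are bilinearly sampled and concatenated before a small MLP. The plane ordering (XY, XZ, YZ), the concatenation, the layer sizes, and the softplus/sigmoid output activations are illustrative assumptions. The source only states that a stack of MLPs predicts color and density. All function names here are hypothetical.

```python
import numpy as np

def bilinear_sample(plane, uv):
    """Sample an (R, R, C) feature plane at continuous coords uv in [-1, 1]^2, shape (N, 2).
    uv[:, 0] indexes columns and uv[:, 1] indexes rows."""
    r = plane.shape[0]
    xy = (uv + 1.0) * 0.5 * (r - 1)                  # map [-1, 1] to [0, R - 1]
    x0 = np.clip(np.floor(xy[:, 0]).astype(int), 0, r - 2)
    y0 = np.clip(np.floor(xy[:, 1]).astype(int), 0, r - 2)
    tx = (xy[:, 0] - x0)[:, None]                    # fractional offsets, shape (N, 1)
    ty = (xy[:, 1] - y0)[:, None]
    return (plane[y0, x0] * (1 - tx) * (1 - ty)
            + plane[y0, x0 + 1] * tx * (1 - ty)
            + plane[y0 + 1, x0] * (1 - tx) * ty
            + plane[y0 + 1, x0 + 1] * tx * ty)

def query_triplane(planes, points):
    """planes: (3, R, R, C) for the XY, XZ and YZ planes; points: (N, 3) in [-1, 1]^3."""
    feats = [bilinear_sample(planes[0], points[:, [0, 1]]),   # project onto XY
             bilinear_sample(planes[1], points[:, [0, 2]]),   # project onto XZ
             bilinear_sample(planes[2], points[:, [1, 2]])]   # project onto YZ
    return np.concatenate(feats, axis=-1)                     # (N, 3C)

def nerf_mlp(features, weights):
    """MLP mapping triplane features to one density and one RGB color per point."""
    h = features
    for w in weights[:-1]:
        h = np.maximum(h @ w, 0.0)                   # ReLU hidden layers
    out = h @ weights[-1]                            # (N, 4): 1 density + 3 color channels
    sigma = np.logaddexp(0.0, out[:, :1])            # softplus keeps density non-negative
    rgb = 1.0 / (1.0 + np.exp(-out[:, 1:]))          # sigmoid keeps color in [0, 1]
    return sigma, rgb

rng = np.random.default_rng(0)
R, C = 16, 8
planes = rng.normal(size=(3, R, R, C))
points = rng.uniform(-1.0, 1.0, size=(5, 3))
weights = [rng.normal(scale=0.3, size=(3 * C, 32)),
           rng.normal(scale=0.3, size=(32, 32)),
           rng.normal(scale=0.3, size=(32, 4))]
sigma, rgb = nerf_mlp(query_triplane(planes, points), weights)
print(sigma.shape, rgb.shape)
```

Because each point is looked up in three 2D planes instead of a dense 3D grid, memory grows with R² rather than R³. This is the usual motivation for triplanes.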

How Do These Components Work Together?

The image encoder captures the global and local features of the input image. The image-to-triplane decoder then transforms these features into the triplane-NeRF representation. The NeRF model processes this representation to predict the color and density of 3D points in space. By integrating these components, TripoSR achieves fast feed-forward 3D generation with high reconstruction quality and computational efficiency.
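The source does not spell out how predicted colors and densities become an image. As a hedged illustration, here is the standard NeRF volume-rendering quadrature. Samples along a camera ray are alpha-composited front to back, and each is weighted by how much light survives to reach it. Treat `composite` as a hypothetical helper that shows the general technique, not as TripoSR's renderer.

```python
import numpy as np

def composite(sigmas, rgbs, t_vals):
    """Alpha-composite S samples along one ray (standard NeRF quadrature).
    sigmas: (S,) densities, rgbs: (S, 3) colors, t_vals: (S,) increasing sample depths."""
    deltas = np.diff(t_vals, append=t_vals[-1] + 1e10)  # spacing; last segment treated as unbounded
    alphas = 1.0 - np.exp(-sigmas * deltas)             # opacity of each segment
    # transmittance T_i = prod_{j<i} (1 - alpha_j): chance the ray reaches sample i unabsorbed
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = alphas * trans
    color = (weights[:, None] * rgbs).sum(axis=0)
    return color, weights

# Ray: empty space (blue but zero density), then an opaque red surface, then green behind it.
t_vals = np.array([0.0, 0.5, 1.0, 1.5])
sigmas = np.array([0.0, 0.0, 1e3, 1e3])
rgbs = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
color, weights = composite(sigmas, rgbs, t_vals)
print(color, weights)
```

In this test ray, the zero-density samples contribute nothing. The first opaque sample absorbs all remaining transmittance, so the pixel comes out red and the green sample behind it is hidden.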

TripoSR's Technical Advancements

To improve 3D generative AI, TripoSR introduces several technical advancements aimed at boosting efficiency and performance. These include data curation techniques for better training, rendering techniques for optimized reconstruction quality, and model configuration adjustments that balance speed and accuracy. Let's explore these further.

Data Curation Techniques for Enhanced Training

TripoSR uses careful data curation to improve the quality of its training data. Training on a curated subset of the Objaverse dataset released under the CC-BY license keeps the training data high in quality. This deliberate curation aims to improve the model's ability to generalize and produce accurate 3D reconstructions. In addition, the model uses a diverse set of data rendering techniques to closely emulate real-world image distributions. This further improves its ability to handle a wide range of scenarios and produce high-quality reconstructions.

Rendering Techniques for Optimized Reconstruction Quality

To optimize reconstruction quality, TripoSR uses rendering techniques that balance computational efficiency against reconstruction granularity. During training, the model renders random 128 × 128 patches from the original 512 × 512 resolution images, which keeps computational and GPU memory loads manageable. TripoSR also implements an importance sampling strategy that emphasizes foreground regions, ensuring faithful reconstruction of object surface details. Together, these rendering techniques let the model produce high-quality 3D reconstructions while remaining computationally efficient.
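A minimal sketch of foreground-biased patch sampling follows. It assumes a binary foreground mask (for example, one derived from the rendered alpha channel) and uses a simple scheme of our own. Most of the time the 128 × 128 crop is centered on a randomly chosen foreground pixel, and otherwise it is placed uniformly. This is a hypothetical illustration of emphasizing foreground regions, not TripoSR's actual importance-sampling rule. `sample_patch` and `fg_bias` are made-up names.

```python
import numpy as np

def sample_patch(image, mask, patch=128, fg_bias=0.9, rng=None):
    """Crop a patch x patch window from `image`, favoring windows that contain foreground.

    With probability fg_bias the window is centered (as far as the borders allow) on a
    randomly chosen foreground pixel; otherwise its position is drawn uniformly."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = mask.shape
    fg = np.argwhere(mask)                           # (K, 2) row/col of foreground pixels
    if len(fg) > 0 and rng.random() < fg_bias:
        cy, cx = fg[rng.integers(len(fg))]
        top = int(np.clip(cy - patch // 2, 0, h - patch))
        left = int(np.clip(cx - patch // 2, 0, w - patch))
    else:
        top = int(rng.integers(0, h - patch + 1))
        left = int(rng.integers(0, w - patch + 1))
    return image[top:top + patch, left:left + patch], (top, left)

rng = np.random.default_rng(0)
image = rng.random((512, 512, 3))                    # stand-in for a 512 x 512 rendering
mask = np.zeros((512, 512), dtype=bool)
mask[400:450, 380:470] = True                        # a small object near the corner
crop, (top, left) = sample_patch(image, mask, rng=rng)
print(crop.shape, top, left)
```

Keeping a small share of uniformly placed patches (`1 - fg_bias`) means background pixels still receive some supervision. Only the 128 × 128 crop, rather than the full 512 × 512 view, would then need to be rendered.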

Model Configuration Adjustments for Balancing Speed and Accuracy

To balance speed and accuracy, TripoSR makes strategic adjustments to the model configuration. The model forgoes explicit camera parameter conditioning, which lets it "guess" camera parameters during training and inference. This approach makes the model more adaptable and robust to real-world input images, since it does not need precise camera information.

TripoSR also changes the number of transformer layers and the size of the triplanes. The details of the NeRF model and the main training configurations have been refined as well. These adjustments help the model generate 3D models rapidly while keeping precise control over the output.

TripoSR's Performance on Public Datasets

Now let's evaluate TripoSR's performance on public datasets using a range of evaluation metrics, and compare its results with state-of-the-art methods.

Evaluation Metrics for 3D Reconstruction

To assess TripoSR's performance, we use a set of evaluation metrics for 3D reconstruction. We curate two public datasets, GSO (Google Scanned Objects) and OmniObject3D, for evaluation, ensuring a diverse and representative collection of common objects.

The evaluation metrics are Chamfer Distance (CD) and F-score (FS). Lower CD and higher FS are better. Both are computed after extracting the isosurface with Marching Cubes, which converts the implicit 3D representations into meshes. In addition, we use a brute-force search to align the predictions with the ground-truth shapes, optimizing for the lowest CD. Together, these metrics give a comprehensive assessment of TripoSR's reconstruction quality and accuracy.
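The metrics above can be sketched on point clouds in NumPy, under several assumptions. Both meshes (for instance, the Marching Cubes output and the ground truth) are assumed to have already been sampled into point sets. The sketch uses the unsquared, two-directional-sum form of Chamfer Distance, and conventions vary between papers. The brute-force alignment is restricted to rotations about the z-axis in 10° steps. These choices and all function names are assumptions for illustration, not the evaluation code behind the reported numbers.

```python
import numpy as np

def pairwise_dist(a, b):
    """Euclidean distances between every point in a (N, 3) and b (M, 3); O(N*M) memory."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)

def chamfer_distance(pred, gt):
    d = pairwise_dist(pred, gt)
    # mean nearest-neighbor distance, pred -> gt plus gt -> pred
    return d.min(axis=1).mean() + d.min(axis=0).mean()

def f_score(pred, gt, threshold):
    d = pairwise_dist(pred, gt)
    precision = (d.min(axis=1) < threshold).mean()   # predicted points close to the GT
    recall = (d.min(axis=0) < threshold).mean()      # GT points covered by the prediction
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def rotation_z(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def align_brute_force(pred, gt, n_angles=36):
    """Try candidate rotations about the z-axis and keep the one with the lowest CD."""
    best = None
    for theta in np.linspace(0.0, 2 * np.pi, n_angles, endpoint=False):
        rotated = pred @ rotation_z(theta).T
        cd = chamfer_distance(rotated, gt)
        if best is None or cd < best[0]:
            best = (cd, theta, rotated)
    return best

rng = np.random.default_rng(0)
gt = rng.uniform(-1.0, 1.0, size=(500, 3))
pred = gt @ rotation_z(np.pi / 2).T          # same shape, but rotated by 90 degrees
cd, theta, aligned = align_brute_force(pred, gt)
print(cd, np.degrees(theta), f_score(aligned, gt, threshold=0.1))
```

In the usage example, the search finds the 270° rotation that undoes the 90° offset. After that alignment the Chamfer Distance is essentially zero and the F-score is 1. The F-score depends on its distance threshold, which is why results are reported at several thresholds.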

Comparing TripoSR with State-of-the-Art Methods

We quantitatively compare TripoSR with existing state-of-the-art feed-forward 3D reconstruction baselines: One-2-3-45, TriplaneGaussian (TGS), ZeroShape, and OpenLRM. The comparison shows that TripoSR significantly outperforms all of these baselines on both CD and FS, achieving new state-of-the-art performance on this task.

We also present a 2D plot of the different methods, with inference time on the x-axis and averaged F-score on the y-axis. It shows that TripoSR is among the fastest networks while also being the best-performing feed-forward 3D reconstruction model.

Quantitative and Qualitative Results

The quantitative results show TripoSR's strong performance, with F-score improvements at several distance thresholds. These metrics demonstrate TripoSR's ability to achieve high precision and accuracy in 3D reconstruction. The qualitative results in Figure 3 compare TripoSR's output meshes visually with those of other state-of-the-art methods on the GSO and OmniObject3D datasets.

The visual comparison shows that TripoSR's reconstructed 3D shapes and textures are of significantly higher quality and more detailed than those of previous methods. Together, these quantitative and qualitative results demonstrate TripoSR's superiority in 3D reconstruction.

The Future of 3D Reconstruction with TripoSR

With its fast feed-forward 3D generation, TripoSR holds significant potential for applications across many fields. Meanwhile, ongoing research and development is paving the way for further advances in 3D generative AI.

Potential Applications of TripoSR in Various Fields

The introduction of TripoSR has opened up many potential applications across diverse fields. In AI, TripoSR's ability to rapidly generate high-quality 3D models from single images could significantly influence the development of advanced 3D generative AI models. In computer vision, TripoSR's strong 3D reconstruction performance could improve the accuracy of object recognition and scene understanding.

In computer graphics, TripoSR's ability to produce detailed 3D objects from single images could transform the creation of virtual environments and digital content. More broadly, TripoSR's efficiency and performance could drive progress in applications such as robotics, augmented reality, virtual reality, and medical imaging.

Ongoing Research and Development for Further Advancements

The release of TripoSR under the MIT license has sparked ongoing research and development aimed at further advancing 3D generative AI. Researchers and developers are actively exploring ways to extend TripoSR's capabilities. These include improving its efficiency, broadening its applicability to diverse domains, and refining its reconstruction quality.

Other efforts focus on optimizing TripoSR for real-world scenarios, making it robust and adaptable to a wide range of input images. The open-source nature of TripoSR has also fostered collaborative research initiatives, driving the development of new techniques and methodologies for 3D reconstruction.

These research and development efforts are poised to take TripoSR further, strengthening its position as a leading model in 3D generative AI.

Conclusion

TripoSR's ability to generate high-quality 3D models from a single image in under 0.5 seconds is a testament to the rapid progress in generative AI. By combining state-of-the-art transformer architectures, careful data curation, and optimized rendering approaches, TripoSR has set a new benchmark for feed-forward 3D reconstruction.

As researchers and developers continue to explore the potential of this open-source model, the future of 3D generative AI looks brighter than ever. Its applications span diverse domains, from computer graphics and virtual environments to robotics and medical imaging, promising substantial growth ahead. TripoSR is therefore poised to drive innovation and open new frontiers in fields where 3D visualization and reconstruction play a crucial role.
