If you upload videos to YouTube, you'll now have to fess up if you used AI to create a realistic-looking video. In a Monday blog post, YouTube said that the Creator Studio tool will display labels in certain areas, requiring you to disclose if the content was made using "synthetic media" (meaning generative AI tools).

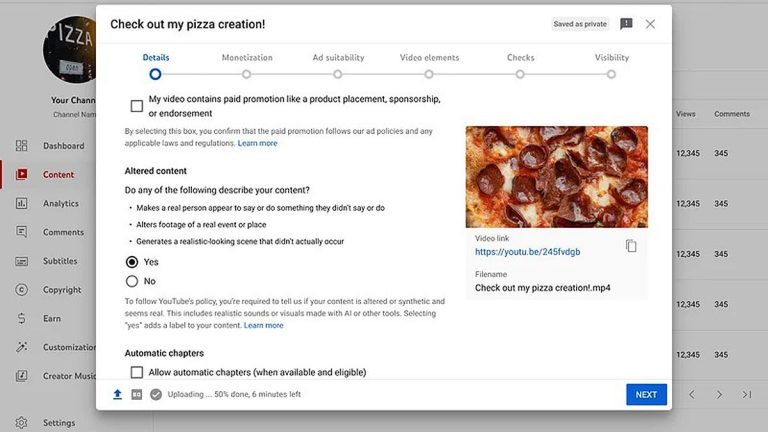

Built into Creator Studio for both YouTube's website and its mobile apps, the AI confirmation labels will appear in the expanded description or on the video player itself. You'll then need to check a box or otherwise indicate if the content makes a real person appear to say or do something they didn't say or do, alters footage of a real event or place, or generates a realistic-looking scene that didn't actually occur. A label for particularly sensitive content may even appear on the video itself.

First announced in November 2023, the policy applies only to realistic-looking video content that could be mistaken for real people, places, or events. Some examples cited in the blog include:

- Using the likeness of a realistic person. Digitally replacing the face of one person with that of another or synthetically generating a person's voice to narrate a video.

- Altering footage of real events or places. Making it look like a real building is on fire or changing a real location in a city to make it look different than in reality.

- Generating realistic scenes. Depicting fictional major events, such as a tornado moving toward a real town.

Using generative AI to create fantastical or unrealistic scenes or to apply special effects wouldn't require a disclosure. Examples of such scenarios include:

- Animating a fantastical world

- Using AI to adjust the color or lighting filters

- Using special effects such as background blur or vintage filters

- Applying beauty filters or other visual enhancements

Fake videos on social media and elsewhere have long triggered concerns about people being fooled into thinking that what they see is real. Generative AI has made producing phony videos even easier, and such videos are therefore more prevalent. Worries are only increasing as we head into a US presidential election in which fake political videos could be used to influence voters.

OK, so the new policy sounds reasonable. But what happens if a YouTube creator simply doesn't comply with the rules?

In the blog post, YouTube said that the company will consider different enforcement measures for users who consistently fail to disclose their use of AI. In certain cases, YouTube may even add its own label if the creator neglects to do so, especially if the content could confuse or mislead people.

Additionally, YouTube is aiming to update its privacy policy to help people request that certain AI-generated content be removed. In those situations, individuals should be able to ask for the removal of any synthetic content that mimics their face or voice.